Chainbase Launches Verifiable Data Network Integrating ZK Proofs for AI Models

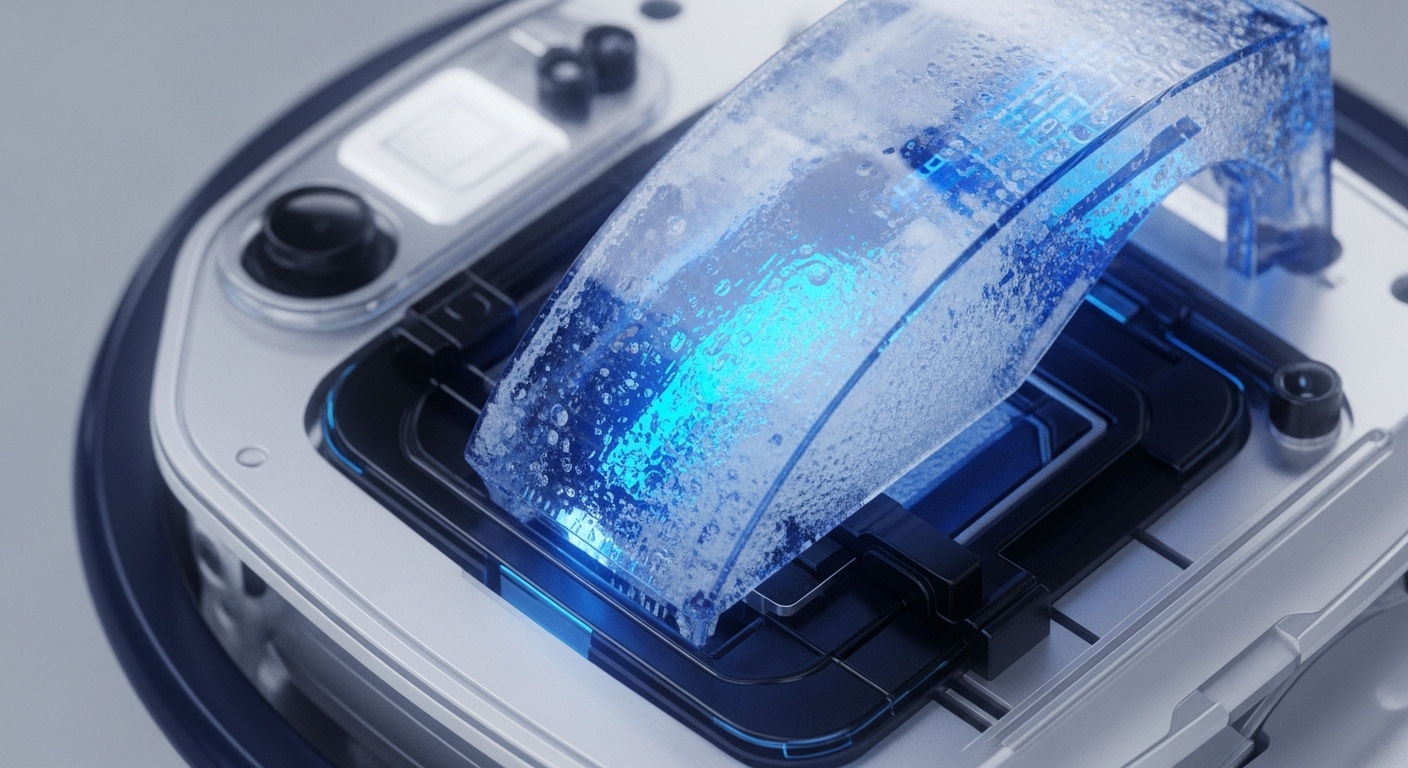

Chainbase's ZK-verified data pipeline is designed to provide the trust layer that decentralized AI model training requires.