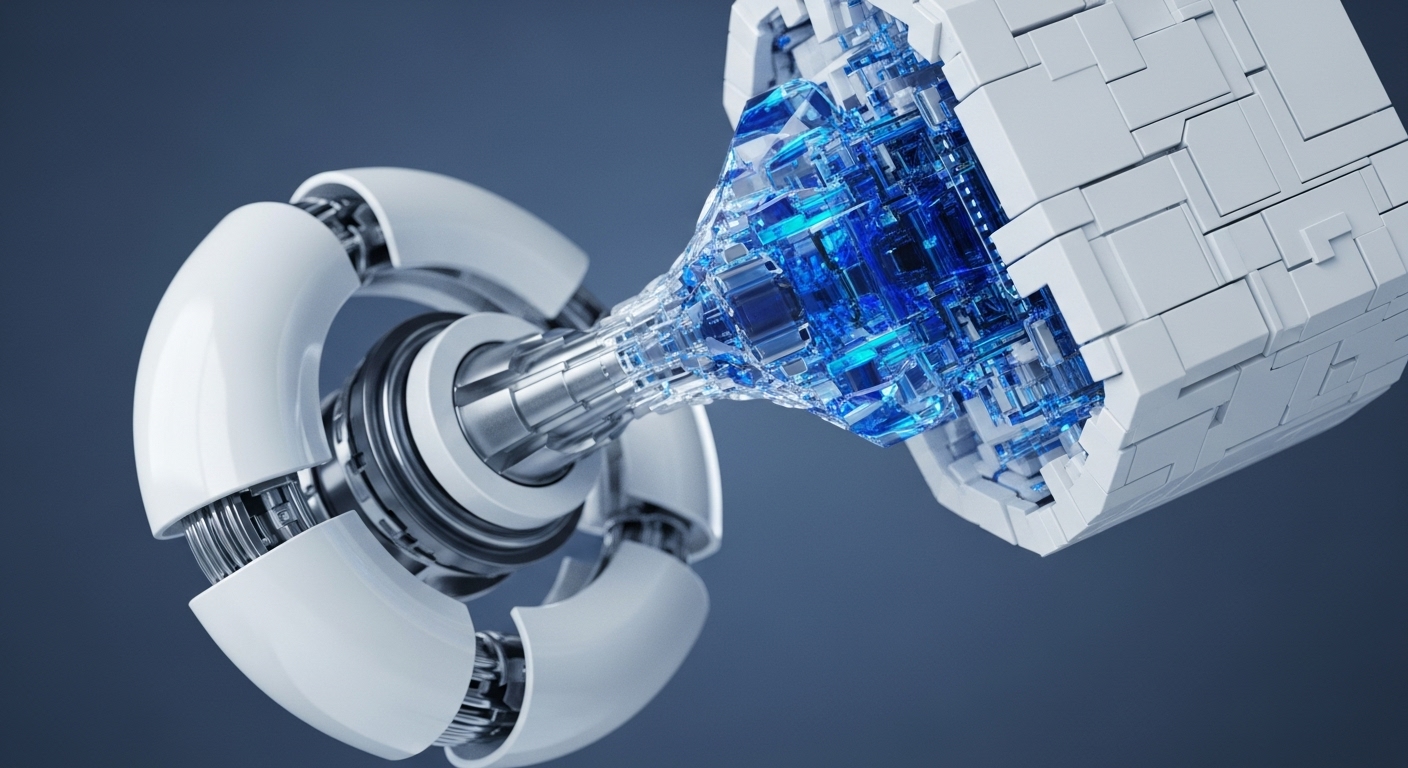

Functional Commitments Verify Program Output without Revealing Logic

A new functional commitment scheme lets a prover commit to a program's logic and efficiently prove its output, enabling private, verifiable outsourced computation.

Sublinear Zero-Knowledge Proofs Democratize Verifiable Computation and Privacy

Sublinear memory scaling for ZKPs breaks the computation size bottleneck, enabling universal verifiable privacy on resource-constrained devices.

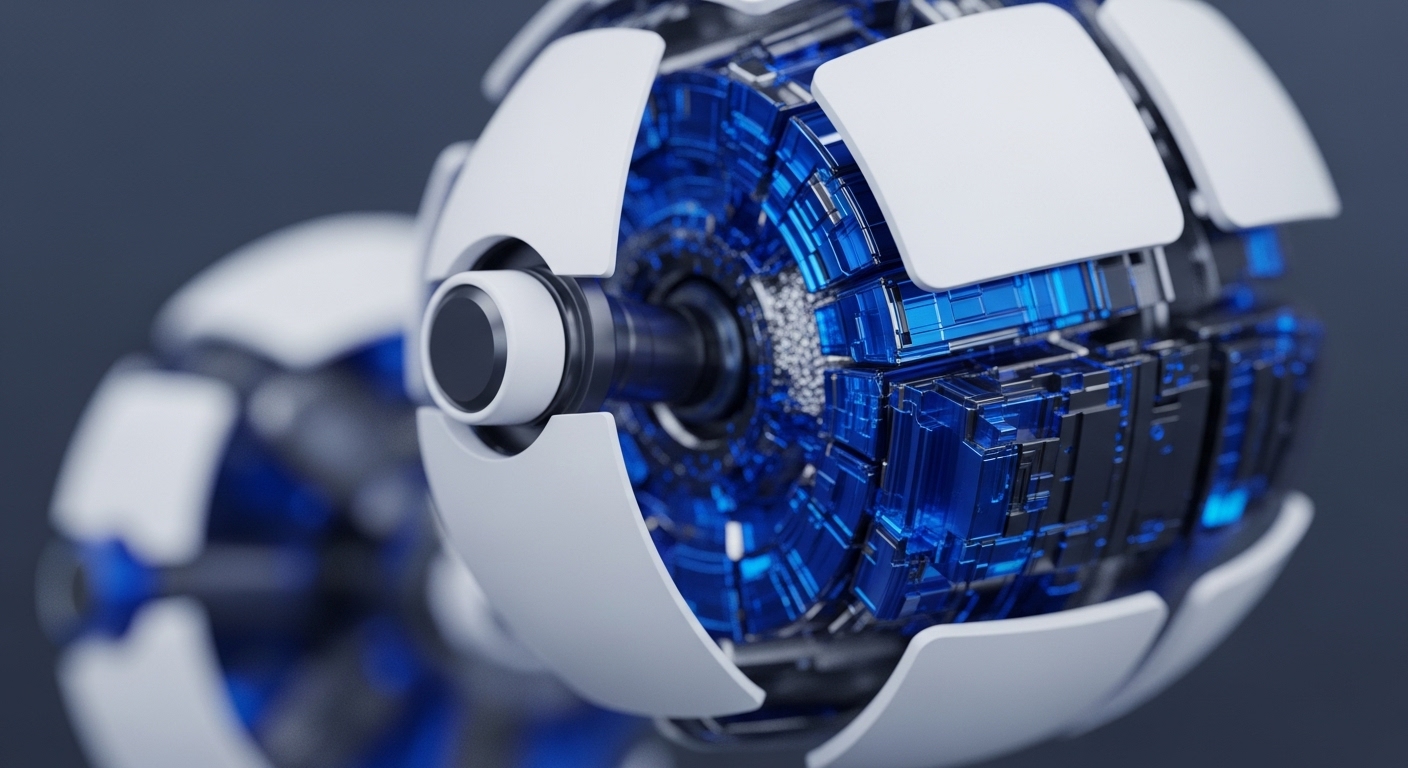

Distributed Zero-Knowledge Proofs Decouple Prover Efficiency from Centralization Risk

New fully distributed ZKP schemes cut prover time and communication to $O(1)$, decentralizing zkRollup block production and boosting throughput.

Succinct Timed Delay Functions Enable Decentralized Fair Transaction Ordering

SVTDs combine verifiable delay functions (VDFs) with succinct proofs to create provably fair, time-locked transaction commitments, mitigating sequencer centralization risk.