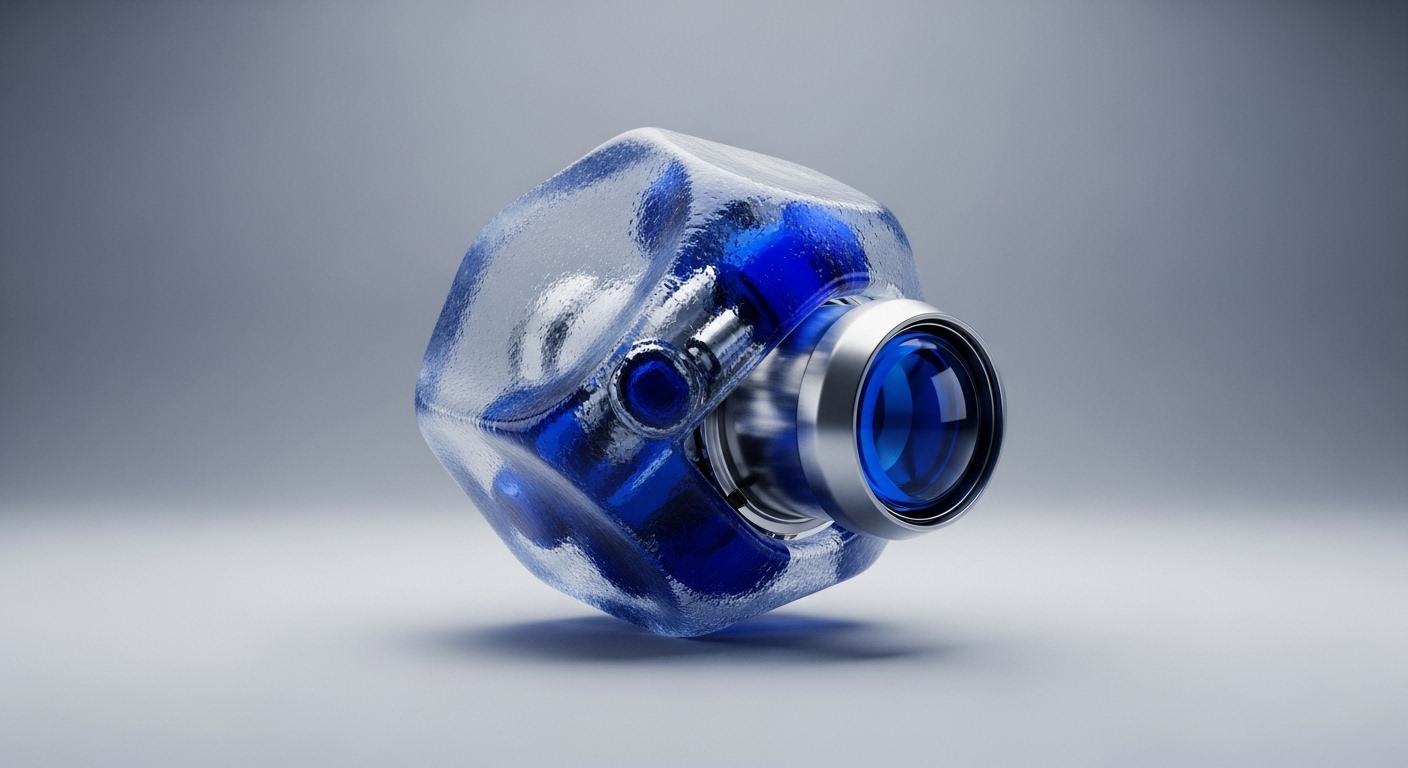

Commit-and-Prove Zero-Knowledge Reduces Space Complexity for Large Circuits

Commit-and-Prove ZK is a new cryptographic primitive that enables memory recycling, dramatically reducing space complexity for large-scale verifiable computation.

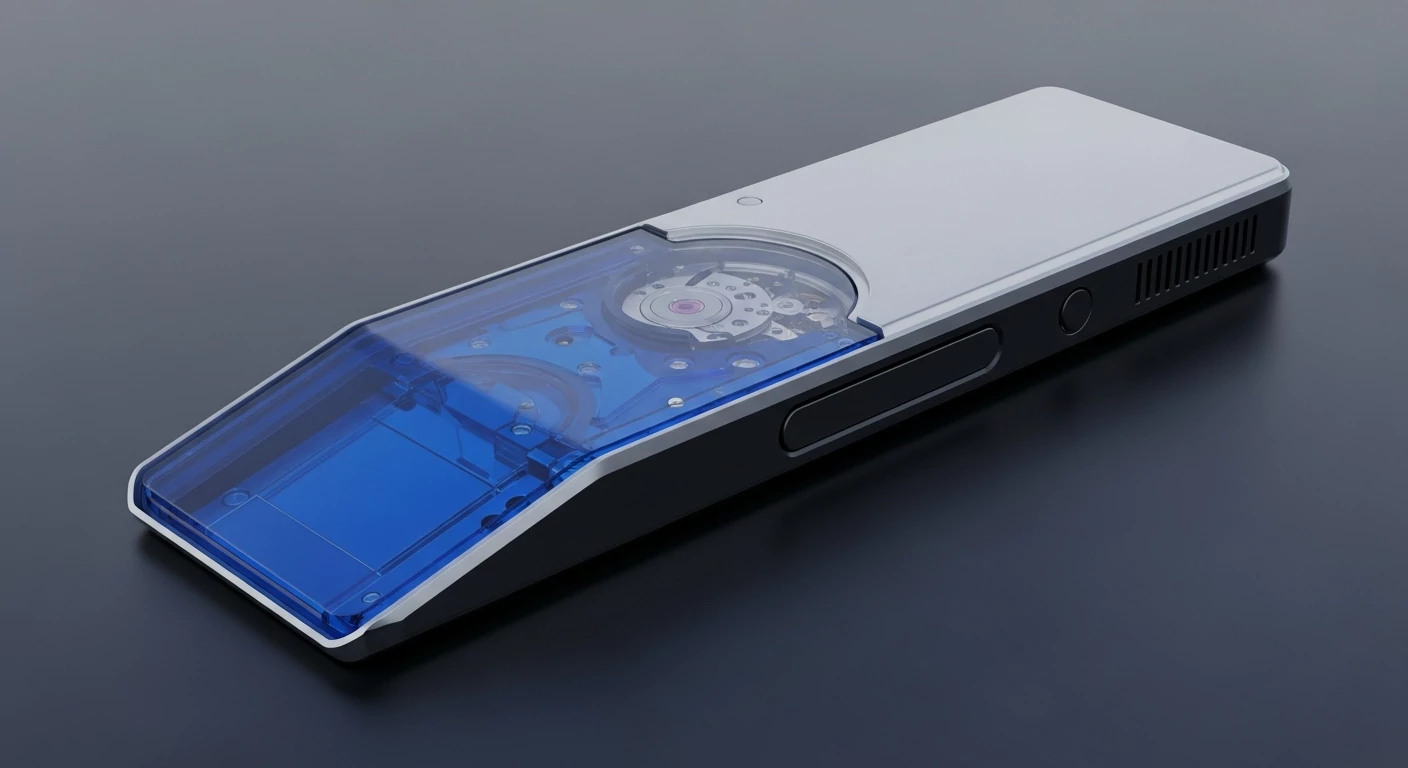

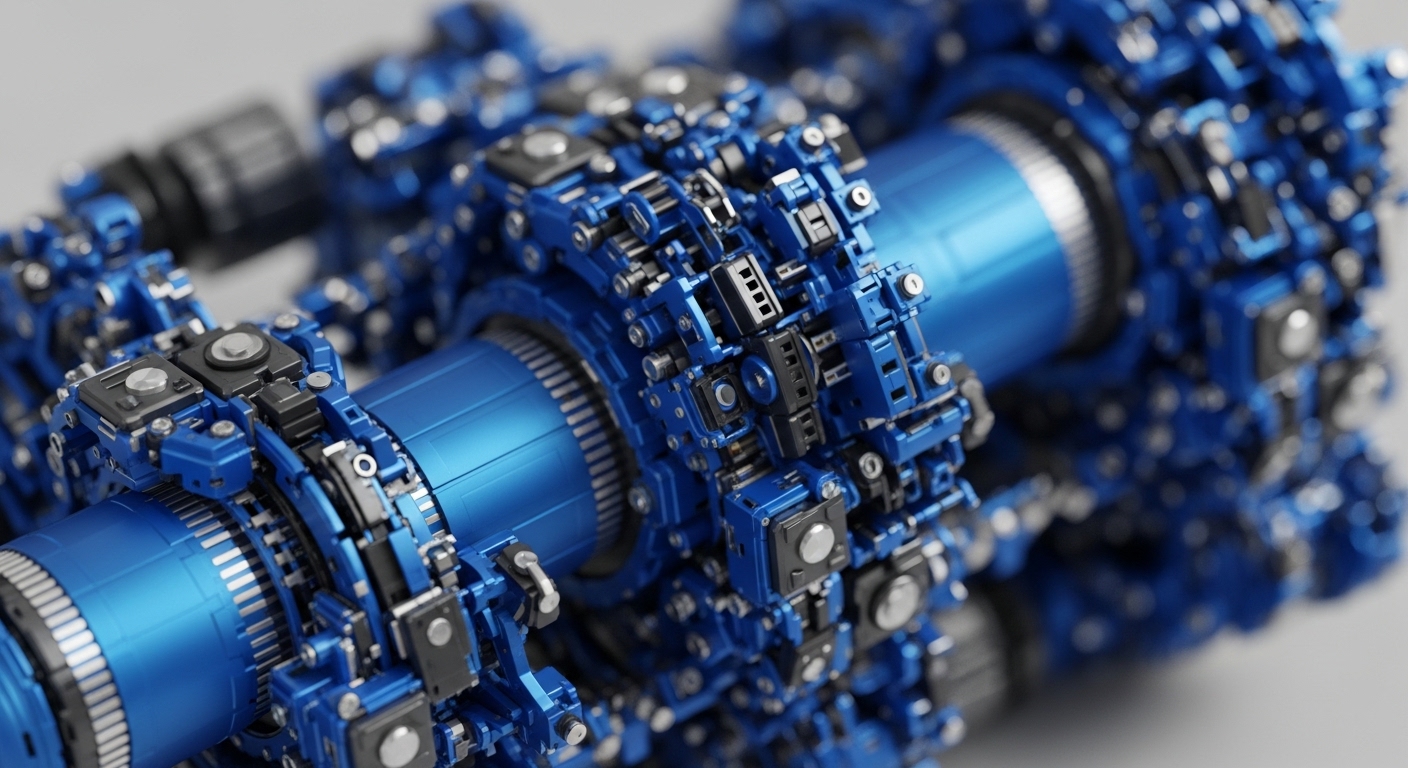

zkSpeed Hardware Dramatically Accelerates HyperPlonk Proving for Ubiquitous Verifiable Computation

A dedicated hardware accelerator for HyperPlonk achieves an $801\times$ speedup, directly targeting the prover-time bottleneck that limits the use of ZKPs in scalable decentralized systems.

Distributed Verifiable Computation Secures Input Privacy and Fault Tolerance

A new distributed verifiable computation primitive guarantees input privacy and result recovery against colluding workers using cryptographic encoding.
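
The combination of input privacy and result recovery can be illustrated with polynomial (Shamir-style) encoding, in the spirit of Lagrange coded computing. The sketch below is an assumption about the general technique and does not reproduce the primitive described in the article: the field modulus `P`, the threshold `t`, the public function f(y) = y² and all helper names are hypothetical. Any `t` colluding workers see only uniformly random shares, and because f(P(z)) has degree `2t`, the master can recover f(x) from any `2t + 1` surviving workers. Note that this toy version tolerates dropped workers but does not detect workers that return wrong values.

```python
import random

P = 2**61 - 1  # Mersenne prime used as the field modulus (illustrative choice)

def share(secret, t, n, rng=random):
    """Shamir-share `secret` with a random degree-t polynomial; any t shares reveal nothing."""
    coeffs = [secret % P] + [rng.randrange(P) for _ in range(t)]

    def poly(z):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, highest coefficient first
            acc = (acc * z + c) % P
        return acc

    # Worker i receives the evaluation of the polynomial at z = i (never at z = 0).
    return [(i, poly(i)) for i in range(1, n + 1)]

def worker_square(point):
    """Each worker applies the public function f(y) = y^2 to its own share only."""
    i, y = point
    return (i, y * y % P)

def interpolate_at_zero(points):
    """Evaluate at z = 0 the unique polynomial passing through `points` (Lagrange form)."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (-xm) % P
                den = den * (xj - xm) % P
        # Division in the field via Fermat's little theorem: den^(P-2) = den^(-1) mod P.
        total = (total + yj * num * pow(den, P - 2, P)) % P
    return total

def distributed_square(secret, t, n, failed=(), rng=random):
    """Compute secret^2 mod P on n workers, tolerating any set of workers in `failed`."""
    shares = share(secret, t, n, rng)
    results = [worker_square(s) for s in shares if s[0] not in failed]
    needed = 2 * t + 1  # f(P(z)) = P(z)^2 has degree 2t
    if len(results) < needed:
        raise ValueError("not enough surviving workers to recover the result")
    return interpolate_at_zero(results[:needed])
```

For example, `distributed_square(12345, t=2, n=7, failed={3, 6})` still recovers `12345**2 % P` even though two of the seven workers never respond.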

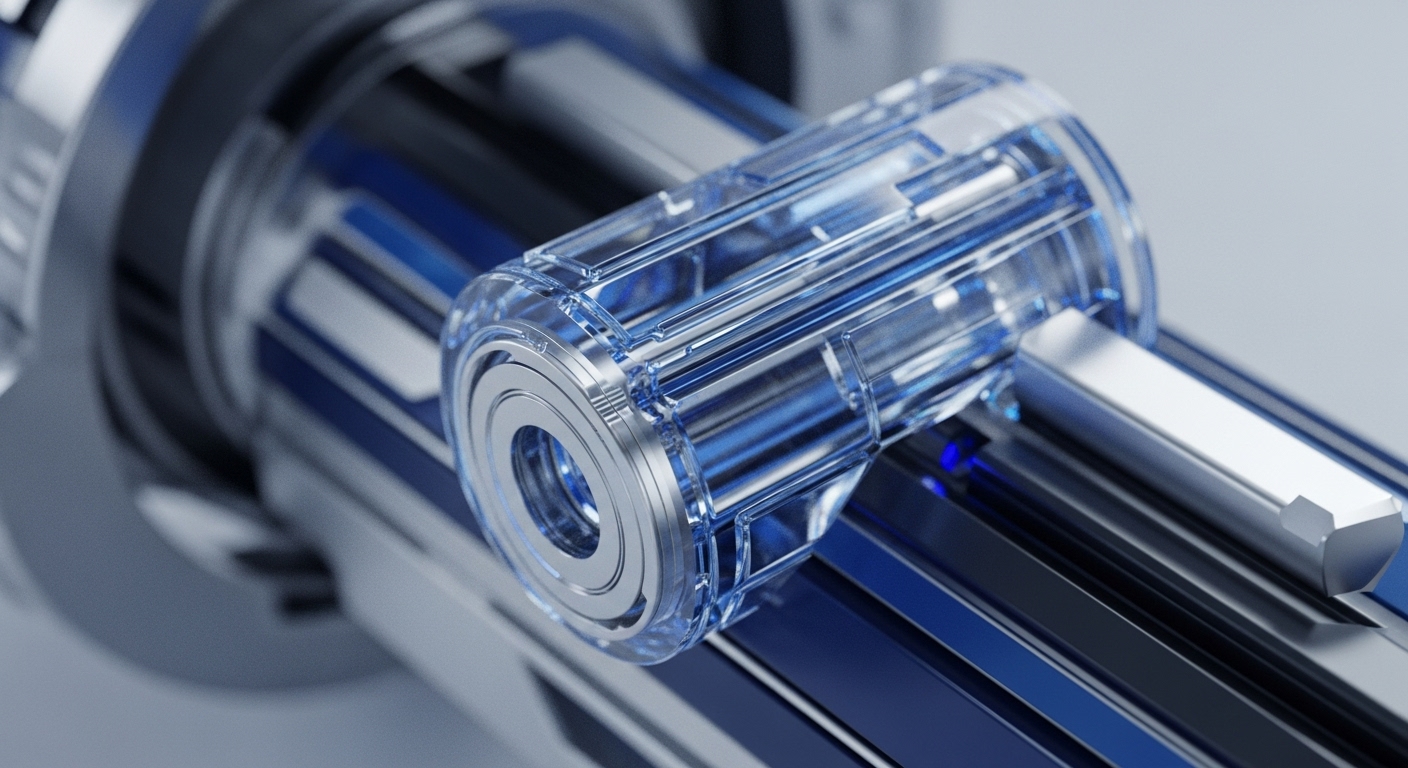

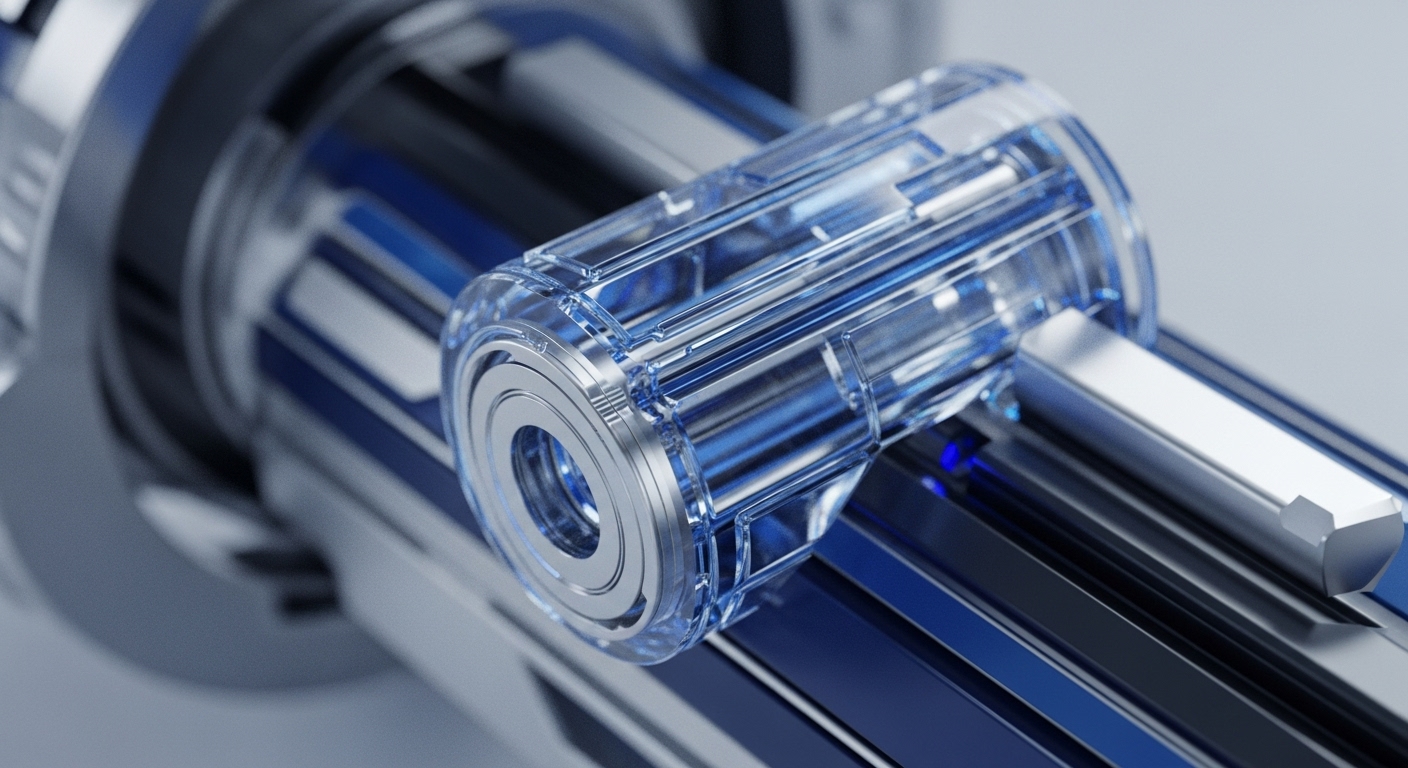

Recursive Proof Composition Enables Infinite Scalability and Constant Verification

Recursive proof composition collapses an unbounded computation history into a single, constant-size proof, making scalability theoretically unbounded.
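
One common way to make this precise is the incrementally verifiable computation (IVC) formulation; the notation below is a standard textbook sketch, not necessarily the construction the article covers. Here $F$ is a hypothetical step function, $z_0$ the initial state, and $\mathsf{V}$ the proof system's verifier. Each new proof $\pi_i$ attests both to one more step of computation and to the validity of the previous proof:

$$
\pi_i \;\text{proves}\; \exists\,(z_{i-1}, \pi_{i-1}):\; z_i = F(z_{i-1}) \;\wedge\; \Big[(i = 1 \wedge z_{i-1} = z_0) \;\vee\; \mathsf{V}\big((i-1, z_0, z_{i-1}), \pi_{i-1}\big) = 1\Big]
$$

After $n$ steps, a verifier checks only $\mathsf{V}\big((n, z_0, z_n), \pi_n\big) = 1$. Because the circuit that re-checks $\mathsf{V}$ has a fixed size independent of $n$, under this formulation both $|\pi_n|$ and the verification cost stay constant as the history grows.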