Tensora Launches AI-Powered Layer 2 Rollup for Decentralized Machine Intelligence Marketplace

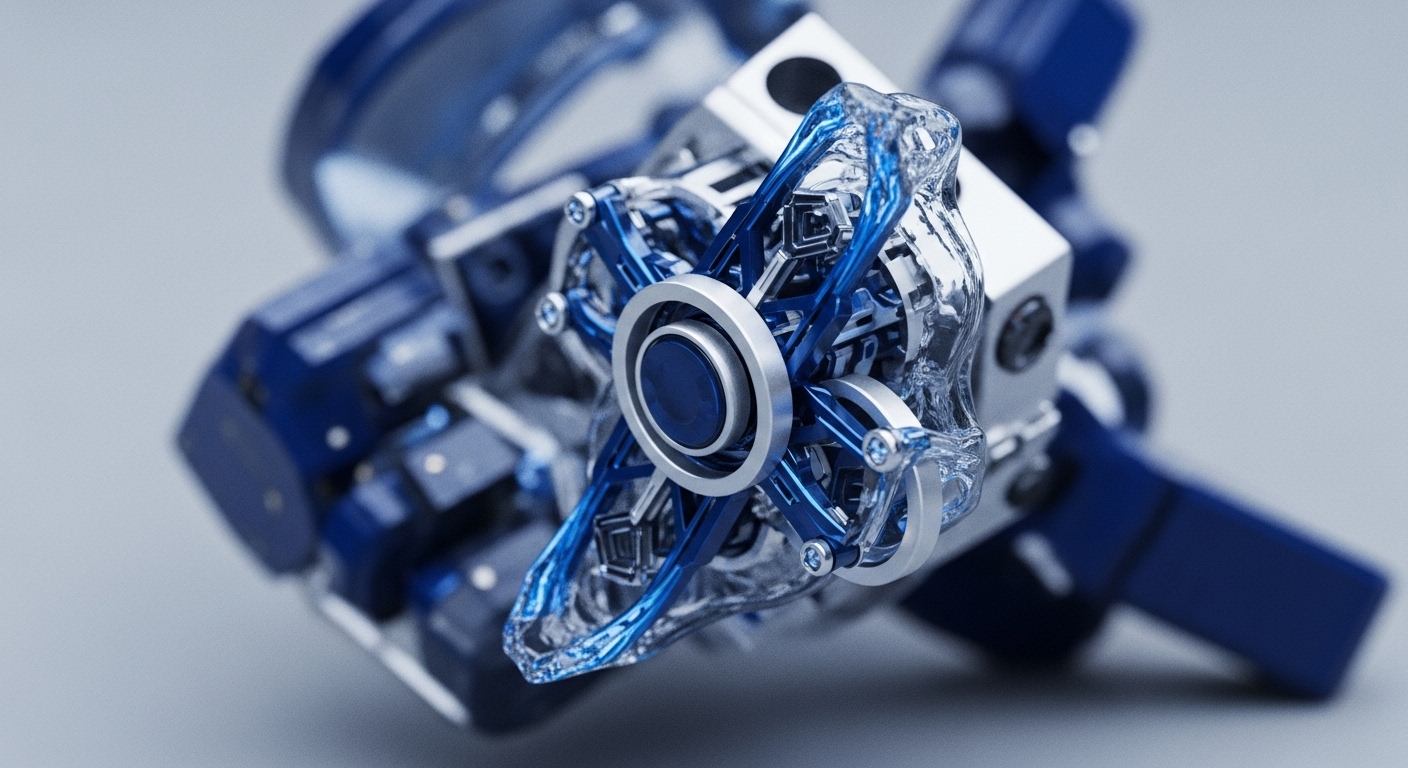

The Tensora L2 uses the modular OP Stack to bring AI computation to BNB Chain, establishing a new primitive for decentralized intelligence markets.