Briefing

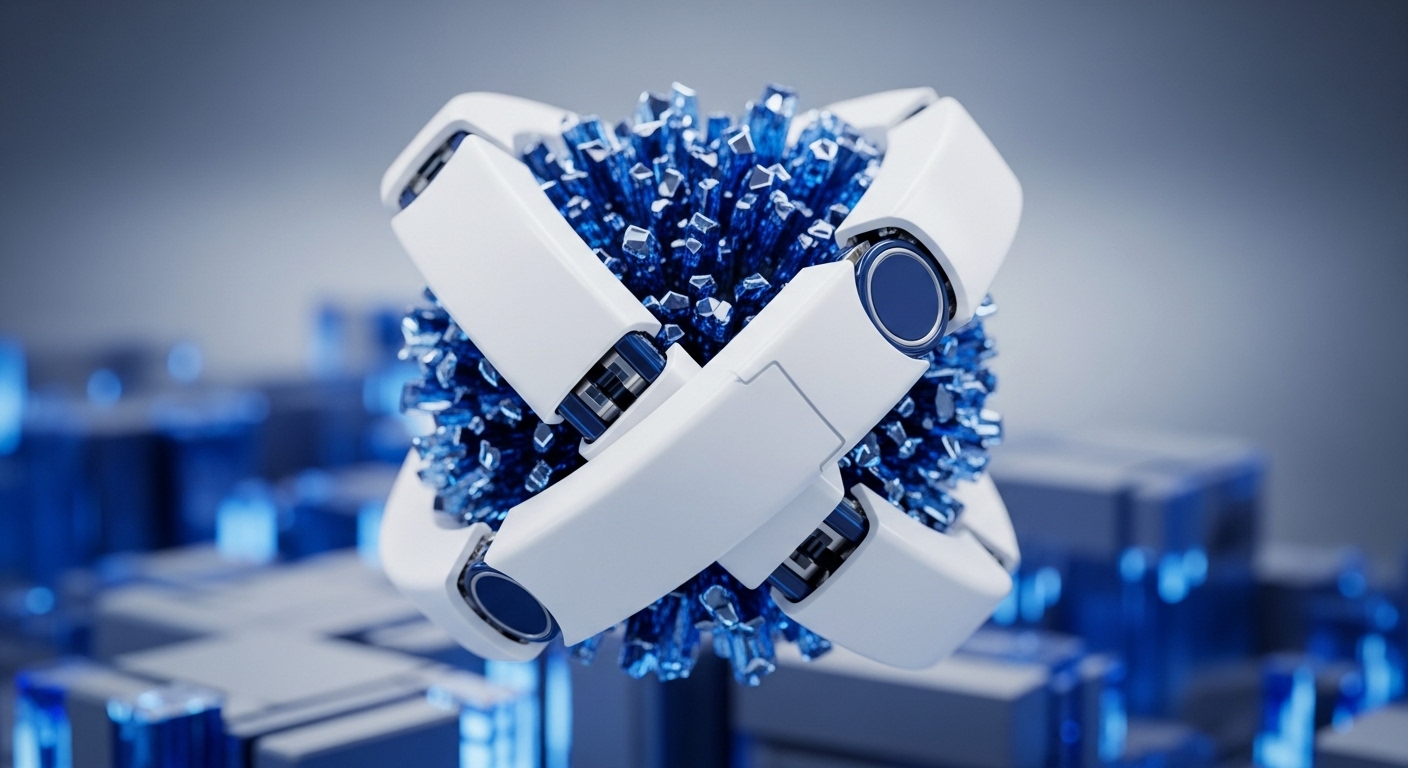

Large-scale machine learning relies on distributed computing for efficiency and scalability, but it faces significant challenges in protecting user data privacy and in maintaining computational integrity against malicious participants. This research introduces “consensus learning,” a distributed machine learning paradigm that combines classical ensemble methods with robust consensus protocols deployed in peer-to-peer systems. The mechanism has two phases: participants first develop individual models and submit predictions, and a communication phase governed by a consensus protocol then aggregates those predictions. The approach aims to provide both user data privacy and robust security against Byzantine attacks, offering a new blueprint for decentralized AI architectures.

Context

Before this research, traditional centralized machine learning and even many distributed ensemble methods often struggled with preserving individual data privacy and ensuring computational integrity when participants were untrusted or malicious. The inherent trade-offs between scalability, privacy, and robustness in distributed systems have historically limited the deployment of truly decentralized, secure, and private machine intelligence at scale. Existing distributed learning approaches frequently lacked explicit, fault-tolerant mechanisms for aggregating model outputs in adversarial environments, leaving systems vulnerable to data breaches or manipulated results.

Analysis

Consensus learning introduces a two-stage process for distributed machine learning. Initially, each participant independently develops a local model and generates predictions for new data inputs. Subsequently, these individual predictions become inputs for a communication phase.

This phase is governed by a robust consensus protocol, ensuring that all participants agree on a final aggregated prediction. This method diverges from prior distributed learning approaches by explicitly embedding a fault-tolerant consensus mechanism into the aggregation of individual model outputs, thereby guaranteeing both data privacy and resilience to adversarial behavior within the distributed network.
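The two stages described above can be illustrated with a minimal sketch. It is not the protocol from the research: a single all-to-all majority vote stands in for the consensus protocol, and the threshold classifiers, the `individual_phase` and `communication_phase` function names, and the always-wrong Byzantine participant are all hypothetical choices made for illustration.

```python
from collections import Counter
from typing import Callable, List, Sequence

Label = int
Model = Callable[[Sequence[float]], Label]


def individual_phase(models: List[Model], x: Sequence[float]) -> List[Label]:
    """Phase 1: every participant predicts with its own local model.

    In this sketch only the predicted label leaves a participant.
    """
    return [model(x) for model in models]


def communication_phase(predictions: List[Label]) -> Label:
    """Phase 2 (simplified stand-in): adopt the majority label.

    Assumes every participant sees the same list of predictions.
    Ties go to the smallest label so all participants decide identically.
    """
    counts = Counter(predictions)
    top = max(counts.values())
    return min(label for label, count in counts.items() if count == top)


def make_threshold_model(threshold: float) -> Model:
    """Hypothetical local model: label 1 if the feature sum exceeds the threshold."""
    return lambda x: int(sum(x) > threshold)


# Four honest participants with slightly different local models.
honest = [make_threshold_model(t) for t in (0.8, 1.0, 1.2, 0.9)]
# One Byzantine participant that always reports label 0.
byzantine = [lambda x: 0]

x = [0.7, 0.8]
predictions = individual_phase(honest + byzantine, x)
final = communication_phase(predictions)
print(predictions, final)
```

Here the four honest predictions outvote the single adversarial one, so the aggregated label matches the honest majority. A real deployment would replace the one-shot vote with a fault-tolerant protocol that also copes with participants who send different values to different peers.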

Parameters

Outlook

This foundational work establishes a new direction for secure and private distributed machine learning. Future research will likely explore optimizing the underlying consensus protocols for various network conditions and formally verifying privacy guarantees across different data distributions. Within three to five years, this paradigm could enable highly resilient, privacy-preserving federated AI systems that power decentralized autonomous agents able to learn collaboratively without compromising sensitive user data, and it could foster new applications in secure multi-party computation for AI.