Briefing

The core research problem is the quadratic overhead inherent in current 2D Reed-Solomon encoding used for Data Availability Sampling (DAS), which fundamentally limits the maximum size and economic feasibility of decentralized data blocks. This paper proposes the Hyper-Dimensional Commitment (HDC), a novel cryptographic primitive that encodes data as a $k$-dimensional tensor with $k > 2$ and uses multi-linear polynomial commitments to achieve sub-quadratic asymptotic complexity in commitment generation and verification. This decouples data security from quadratic cost, providing a foundational mechanism for significantly increasing the data throughput of rollup and sharding architectures.
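
The "multi-linear polynomial" mentioned above can be illustrated with the multilinear extension of $2^k$ data values: the unique polynomial of degree at most one in each of $k$ variables that matches the data on the Boolean hypercube $\{0,1\}^k$. The Python below is a minimal sketch under stated assumptions (a toy prime modulus `P` and a hypothetical helper `mle_eval`, neither taken from the source). It shows only the polynomial a multilinear commitment would bind to, not HDC's commitment scheme itself, which the briefing does not specify.

```python
# Minimal sketch of a multilinear extension (MLE) over a toy prime field.
# Assumptions (not from the source): the modulus P and the helper name mle_eval.
P = 2**31 - 1  # toy prime modulus chosen for illustration only


def mle_eval(values, point):
    """Evaluate the multilinear extension of `values` at `point` (mod P).

    `values[i]` is the data at the hypercube vertex whose bits spell out i,
    with point[0] matching the most significant bit.
    """
    k = len(point)
    if len(values) != 2 ** k:
        raise ValueError("expected exactly 2**k values")
    layer = [v % P for v in values]
    for r in point:
        # Fold out one variable: f(r, rest) = (1 - r) * f(0, rest) + r * f(1, rest).
        half = len(layer) // 2
        layer = [((1 - r) * layer[i] + r * layer[half + i]) % P for i in range(half)]
    return layer[0]
```

For instance, `mle_eval([3, 1, 4, 1, 5, 9, 2, 6], [1, 0, 1])` returns 9, the value stored at index `0b101`, while the off-hypercube point `[2, 0, 0]` returns 7. Folding one variable per round keeps a single evaluation linear in the number of data values.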

Context

Before this research, the standard approach to the Data Availability Problem relied on 2D Reed-Solomon erasure coding: a block is arranged as a square matrix and extended along both rows and columns, so the encoded size grows with the square of the matrix side. This redundancy is what gives random sampling a high probability of success. The resulting limitation imposed a hard, non-linear cost on scaling the data layer, forcing a trade-off between the security guarantee of DAS and the economic cost of data storage and computation for block producers.
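
The 2D construction described above can be sketched as follows: each row of a $k \times k$ block is treated as evaluations of a polynomial of degree $< k$ and extended to $2k$ points, and then every column of the result is extended the same way. This is a minimal illustration assuming a toy prime field and naive Lagrange interpolation; the modulus `P` and the helper names `rs_extend` and `extend_2d` are hypothetical, and production systems typically use FFT-based encoding rather than this quadratic-time interpolation.

```python
# Minimal sketch of 2D Reed-Solomon extension for DAS over a toy prime field.
# Assumptions (not from the source): the modulus P and the helper names below.
P = 2**31 - 1  # toy prime modulus chosen for illustration


def rs_extend(line):
    """Treat `line` as evaluations of a degree < n polynomial at x = 0..n-1 and
    return its evaluations at x = 0..2n-1 (systematic: the first n are unchanged)."""
    n = len(line)
    out = []
    for x in range(2 * n):
        acc = 0
        for i, yi in enumerate(line):
            # Lagrange basis polynomial L_i evaluated at x.
            num, den = 1, 1
            for j in range(n):
                if j != i:
                    num = num * (x - j) % P
                    den = den * (i - j) % P
            acc = (acc + yi * num * pow(den, -1, P)) % P
        out.append(acc)
    return out


def extend_2d(square):
    """Extend a k x k matrix to 2k x 2k: extend every row, then every column."""
    rows = [rs_extend(row) for row in square]            # k rows of length 2k
    cols = [rs_extend(list(col)) for col in zip(*rows)]  # 2k columns of length 2k
    return [list(row) for row in zip(*cols)]             # transpose back to rows
```

Extending `[[1, 2], [3, 4]]` gives `[[1, 2, 3, 4], [3, 4, 5, 6], [5, 6, 7, 8], [7, 8, 9, 10]]`: 16 cells from 4 inputs, with every row and every column a Reed-Solomon codeword, so any $k$ of the $2k$ cells in a line suffice to rebuild that line.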

Analysis

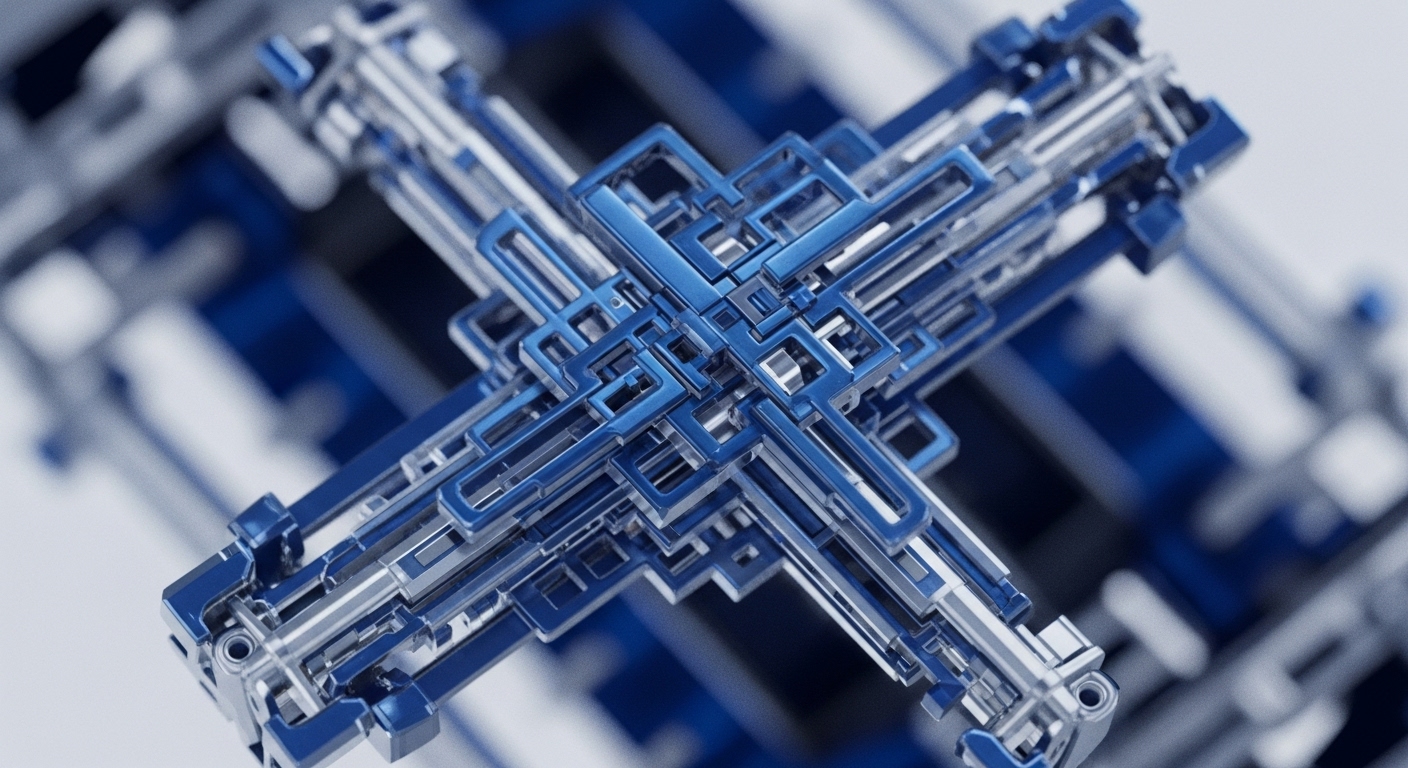

The Hyper-Dimensional Commitment (HDC) shifts the data encoding model from a planar (2D) matrix to a spatial ($k$-dimensional) tensor. Previous methods committed to data along two axes, whereas HDC commits along all $k$ axes simultaneously. This structure allows a sampler to verify the integrity of the entire data set by taking a small, constant number of samples across multiple dimensions. The mechanism’s power lies in the geometric property that sampling in higher dimensions exponentially increases the probability of detecting a malicious encoding with fewer queries, thereby achieving a sub-quadratic $O(N^{1+\epsilon})$ complexity for the commitment process, a significant asymptotic improvement over the previous $O(N^2)$ bound.
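
To make "committing along $k$ axes" concrete, the sketch below applies the same row-and-column extension used by the 2D scheme to every axis of a $k$-dimensional tensor, yielding a tensor-product Reed-Solomon code. This is an assumption about what such an encoding could look like, not the paper's construction: the source does not specify per-axis rates, evaluation domains, or how the multilinear commitment is computed, and no commitment or sampling logic is shown. The modulus `P` and the helpers `rs_extend` and `extend_tensor` are hypothetical.

```python
import itertools

# Minimal sketch of a k-dimensional tensor-product Reed-Solomon extension.
# Assumptions (not from the source): the modulus P, rate 1/2 per axis, helper names.
P = 2**31 - 1  # toy prime modulus chosen for illustration


def rs_extend(line):
    """Evaluate the degree < n interpolant of `line` (given at x = 0..n-1) at x = 0..2n-1."""
    n = len(line)
    out = []
    for x in range(2 * n):
        acc = 0
        for i, yi in enumerate(line):
            num, den = 1, 1
            for j in range(n):
                if j != i:
                    num = num * (x - j) % P
                    den = den * (i - j) % P
            acc = (acc + yi * num * pow(den, -1, P)) % P
        out.append(acc)
    return out


def extend_tensor(tensor, shape):
    """Extend a k-dimensional tensor along each of its k axes in turn.

    `tensor` maps index tuples to field elements; every axis length doubles.
    """
    shape = list(shape)
    for axis in range(len(shape)):
        others = [range(n) for i, n in enumerate(shape) if i != axis]
        extended = {}
        for rest in itertools.product(*others):
            # Rebuild the full index by inserting the current axis position into `rest`.
            line = [tensor[rest[:axis] + (t,) + rest[axis:]] for t in range(shape[axis])]
            for t, value in enumerate(rs_extend(line)):
                extended[rest[:axis] + (t,) + rest[axis:]] = value
        tensor = extended
        shape[axis] *= 2
    return tensor, tuple(shape)
```

For example, extending a $2 \times 2 \times 2$ tensor whose entries are $1 + a + 2b + 3c$ yields a $4 \times 4 \times 4$ tensor in which every entry still equals $1 + a + 2b + 3c$, so index `(3, 3, 3)` holds 19. With a rate-1/2 extension on every axis, this toy encoding enlarges the data by a factor of $2^k$; the briefing does not state which rates HDC uses.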

Parameters

- Asymptotic Complexity Reduction → $O(N^{1+\epsilon})$ (The new complexity bound for commitment generation, where $\epsilon$ is a small constant, compared to the previous $O(N^2)$.)

- Dimensionality Factor → $k > 2$ (The number of dimensions used in the new polynomial commitment scheme, which enables the sub-quadratic complexity.)

- Sampling Efficiency Gain → $> 50\%$ (The theoretical reduction in the number of required samples to achieve the same security guarantee as 2D encoding, directly translating to lower overhead.)
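
The sampling and complexity parameters above rest on standard arithmetic. If an adversary withholds a fraction $f$ of the encoded cells and a light client draws $s$ independent uniform samples, it misses every withheld cell with probability $(1-f)^s$, so reaching confidence $c$ requires $s \ge \ln(1-c)/\ln(1-f)$. Separately, the ratio between the two complexity bounds is $N^2 / N^{1+\epsilon} = N^{1-\epsilon}$. The sketch below computes both; the helper names are hypothetical, and since the source gives neither $f$ nor $\epsilon$ for HDC, the inputs in the usage note are illustrative assumptions.

```python
import math

# Hypothetical helpers (not from the source) for the sampling and complexity arithmetic.


def samples_needed(withheld_fraction, confidence):
    """Smallest s with 1 - (1 - f)**s >= confidence, assuming independent uniform
    samples (with replacement) and a fraction f of the encoded cells withheld."""
    if not (0 < withheld_fraction < 1 and 0 < confidence < 1):
        raise ValueError("withheld_fraction and confidence must lie strictly in (0, 1)")
    s, miss_probability = 0, 1.0
    # Add samples until the chance of missing every withheld cell is small enough.
    while 1 - miss_probability < confidence:
        miss_probability *= 1 - withheld_fraction
        s += 1
    return s


def cost_ratio(n, eps):
    """How many times larger N**2 is than N**(1 + eps), ignoring constant factors."""
    return n ** (1 - eps)
```

With $f = 0.25$ (close to the minimum fraction an adversary must withhold to block reconstruction in the usual 2D construction) and $c = 0.99$, `samples_needed` returns 17; a gain above 50% would mean the HDC count for the same target falls below half of the 2D count. `cost_ratio(10**6, 0.5)` is about 1000, so at $N = 10^6$ and $\epsilon = 0.5$ the quadratic bound is roughly a thousand times larger.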

Outlook

This new commitment primitive opens a crucial avenue for research into practical, production-ready implementations of hyper-dimensional encoding, specifically optimizing the multi-linear polynomial arithmetic for real-world hardware. Over the next three to five years, this theory is poised to unlock “terabyte-scale” data availability layers, transforming rollup architecture by enabling rollups to handle orders of magnitude more throughput while maintaining the same strong guarantees of decentralization and data availability.

Verdict

The Hyper-Dimensional Commitment is a foundational breakthrough that redefines the asymptotic limits of decentralized data availability, enabling the next generation of hyper-scalable blockchain architectures.