Briefing

The core research problem is the computational infeasibility of generating zero-knowledge proofs for the massive arithmetic circuits produced by Large Language Model (LLM) inference, a fundamental barrier to decentralized, trustless AI. The breakthrough is the Incremental Vector Commitment (IVC) scheme, which lets the prover commit to the model weights once and then generate a constant-size proof for each layer's computation. Because each proving step covers a single layer rather than the whole network, the size of each circuit is decoupled from the total model size. This opens a path for provably correct and private AI computation to scale to models with billions of parameters and provides the cryptographic foundation for a truly verifiable AI layer in Web3.

Context

Prior to this work, verifiable computation for LLMs was bottlenecked by the need to prove the entire forward pass inside a single, enormous zero-knowledge circuit, so proving time and memory usage grew linearly with the total number of model parameters. This made ZK-LLM inference impractical and forced developers either to use small, non-competitive models or to give up the zero-knowledge property entirely, leaving users to trust the model provider.

Analysis

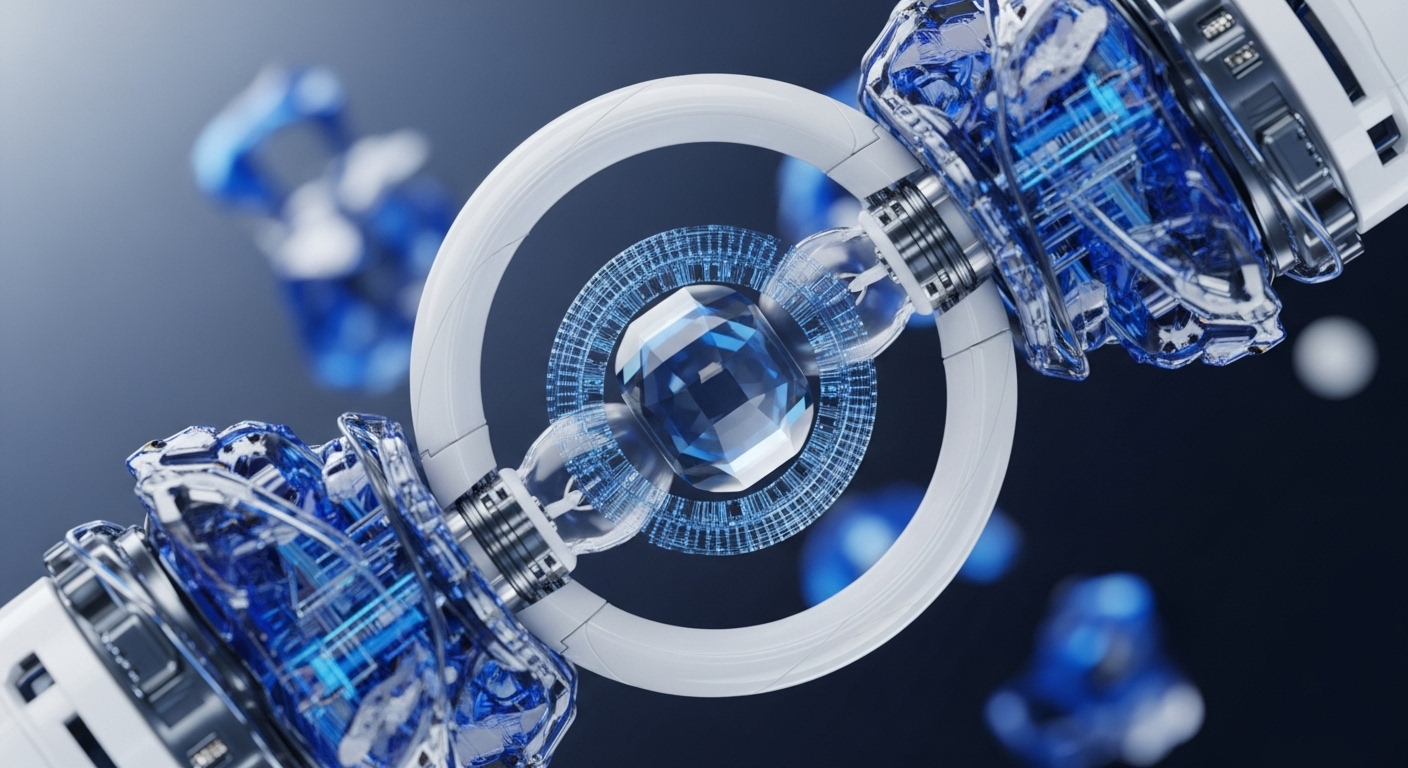

The IVC scheme restructures how the LLM's weights are committed. Conceptually, instead of a single, monolithic commitment to the entire model, it creates a series of interlocking, constant-size commitments. The prover first commits to the initial state, which is the model weights. For each subsequent transformer layer, the prover then generates a small, succinct proof that the layer's output is correct relative to the commitment to the previous layer's state.
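
To make "commit to the weights once" concrete, the sketch below uses a binary Merkle tree, a standard vector commitment, over hypothetical per-layer weight blobs. It is an assumption for illustration, not the scheme's actual construction: a Merkle root is binding but not hiding, and the helper names (`commit`, `open_at`, `verify_opening`) are invented here. The point is that one 32-byte root fixes every layer's weights, and a short authentication path later proves that a given layer's weights belong to that root.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _leaf_level(chunks: list[bytes]) -> list[bytes]:
    # Prefix 0x00 for leaves and 0x01 for internal nodes (domain separation).
    return [_hash(b"\x00" + c) for c in chunks]


def _next_level(level: list[bytes]) -> list[bytes]:
    """Hash adjacent pairs; callers guarantee the level has even length."""
    return [_hash(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def commit(chunks: list[bytes]) -> bytes:
    """Return a 32-byte Merkle root over an ordered vector of weight chunks."""
    if not chunks:
        raise ValueError("cannot commit to an empty vector")
    level = _leaf_level(chunks)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # pad odd levels by duplicating the last node
        level = _next_level(level)
    return level[0]


def open_at(chunks: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Authentication path for chunks[index]: (sibling hash, node_is_left) per level."""
    level = _leaf_level(chunks)
    path = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        path.append((level[index ^ 1], index % 2 == 0))
        level = _next_level(level)
        index //= 2
    return path


def verify_opening(root: bytes, chunk: bytes, path: list[tuple[bytes, bool]]) -> bool:
    """Recompute the root from one chunk and its path, then compare."""
    node = _hash(b"\x00" + chunk)
    for sibling, node_is_left in path:
        pair = node + sibling if node_is_left else sibling + node
        node = _hash(b"\x01" + pair)
    return node == root


# Hypothetical per-layer weight blobs; a real model would serialize its tensors.
layer_weights = [f"layer-{i}-weights".encode() for i in range(5)]
root = commit(layer_weights)
proof_for_layer_3 = open_at(layer_weights, 3)
print(len(root), verify_opening(root, layer_weights[3], proof_for_layer_3))  # prints: 32 True
```

The root stays 32 bytes however many layers are committed, while each opening grows only logarithmically with the number of layers. Duplicating the last node on odd levels keeps the sketch short; production Merkle designs usually avoid that padding rule.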

Each new commitment then cryptographically incorporates the proof from the previous step, so the final commitment attests to the whole chain of layers. This layer-by-layer, incremental verification replaces one massive, intractable circuit with a sequence of small, manageable circuits, sharply reducing the resources needed for any single proving step and enabling efficient recursion.
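
The chaining described above can be illustrated with a toy hash chain. This is a sketch under strong assumptions: SHA-256 stands in for both the per-step proof and the folding step, `toy_layer` is a hypothetical placeholder for a transformer layer, and each step simply re-hashes its own weights instead of opening the up-front commitment. It has neither zero-knowledge nor succinct verification. It only shows the data flow: each step absorbs the previous running value, and that value stays a fixed 32 bytes no matter how many layers are processed.

```python
import hashlib
from dataclasses import dataclass


def h(*parts: bytes) -> bytes:
    """SHA-256 over length-prefixed parts, so different splits of the input differ."""
    digest = hashlib.sha256()
    for part in parts:
        digest.update(len(part).to_bytes(8, "big"))
        digest.update(part)
    return digest.digest()


@dataclass(frozen=True)
class Step:
    layer: int
    output: bytes
    running: bytes  # 32-byte running commitment after this layer


def toy_layer(weights: bytes, activation: bytes) -> bytes:
    """Hypothetical stand-in for one transformer layer (any deterministic map)."""
    return h(b"layer", weights, activation)


def run_incrementally(layers: list[bytes], x: bytes) -> list[Step]:
    weight_commitment = h(*layers)  # commit to all weights once, up front
    running = h(b"init", weight_commitment, x)
    steps = []
    for i, weights in enumerate(layers):
        y = toy_layer(weights, x)
        # Fold the previous running value (standing in for the previous proof)
        # with this layer's index, weights, and output into a new fixed-size value.
        running = h(b"step", running, i.to_bytes(4, "big"), h(weights), y)
        steps.append(Step(i, y, running))
        x = y
    return steps


def verify_step(prev_running: bytes, step: Step, weights: bytes, x: bytes) -> bool:
    """Re-check one step in isolation; a real IVC verifier checks only the final proof."""
    y = toy_layer(weights, x)
    expected = h(b"step", prev_running, step.layer.to_bytes(4, "big"), h(weights), y)
    return y == step.output and expected == step.running


layers = [f"w{i}".encode() for i in range(30)]
steps = run_incrementally(layers, b"prompt")
print(len(steps), len(steps[-1].running))  # prints: 30 32
```

Changing any layer's weights changes every later running value, which is the property that lets a single final commitment stand for the whole forward pass. In a real scheme the hash would be replaced by a recursive SNARK or folding step, so the verifier never re-executes the layers as `verify_step` does here.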

Parameters

- Prover Time Reduction → 98% – Reduction in the computational time required to generate the full ZK proof for a 7-billion-parameter LLM inference, compared with monolithic ZK-SNARKs.
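
Read as a speedup, a 98% reduction means the incremental prover needs 2% of the monolithic prover's time. With $T_{\text{mono}}$ and $T_{\text{IVC}}$ (notation introduced here) denoting the two proving times for the same inference:

$$
T_{\text{IVC}} = (1 - 0.98)\,T_{\text{mono}} = 0.02\,T_{\text{mono}}
\quad\Longrightarrow\quad
\frac{T_{\text{mono}}}{T_{\text{IVC}}} = \frac{1}{0.02} = 50
$$

That is, roughly a 50× faster prover for the 7-billion-parameter case.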

Outlook

The immediate next step is to implement the IVC primitive in a production-grade ZK-EVM environment and benchmark its performance on real-world transformer architectures. Strategically, this result opens up a new class of decentralized applications in which the integrity of AI-generated content and AI-driven financial decisions is mathematically guaranteed. Over the next 3-5 years, it could enable fully on-chain AI agents whose actions are verifiably correct, secure decentralized inference marketplaces, and a new privacy-preserving standard for large-scale verifiable computation beyond AI.

Verdict

The Incremental Vector Commitment primitive is a foundational cryptographic innovation that addresses the scalability crisis for trustless AI, enabling provable computation to be integrated systemically into decentralized systems.