Briefing

The core problem in blockchain-secured Federated Learning (FL) is a three-way tension: Proof-of-Work is computationally expensive, Proof-of-Stake risks centralization, and learning-based consensus mechanisms carry inherent privacy vulnerabilities. This research introduces the Zero-Knowledge Proof of Training (ZKPoT) consensus, which uses zk-SNARKs to let participants cryptographically prove the correctness and quality of their local model contributions without disclosing sensitive training data or model parameters. Its most important implication is a robust, scalable, and privacy-preserving ecosystem in which collaborative AI model development can be verified trustlessly on-chain.

Context

Before this work, attempts to secure Federated Learning on a blockchain were constrained by the limitations of traditional consensus. Proof-of-Work protocols were prohibitively expensive, while Proof-of-Stake risked centralizing model control among large stakeholders. Learning-based consensus introduced a further limitation: the model gradients or updates that must be shared for verification can expose the underlying private data. The result was an unsolved privacy-utility trade-off that hampered real-world adoption in sensitive sectors like healthcare.
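To make the leakage concern concrete, here is a minimal sketch of a well-known special case. It assumes a linear model with a bias term, a squared-error loss, and a single private training sample. The function names are illustrative and are not taken from the research. In this setting, anyone who sees the shared gradients can recover the private input exactly, because the weight gradient is the input scaled by the bias gradient.

```python
def gradients(w, b, x, y):
    """Gradients of 0.5 * (w.x + b - y)^2 for one private sample (x, y)."""
    residual = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    grad_w = [residual * xi for xi in x]  # equals residual * x
    grad_b = residual                     # equals residual
    return grad_w, grad_b


def reconstruct_input(grad_w, grad_b):
    """Recover the private input x from the shared gradients alone."""
    if grad_b == 0:
        raise ValueError("zero residual: the gradients carry no signal")
    return [g / grad_b for g in grad_w]


if __name__ == "__main__":
    private_x = [0.5, -2.0, 3.0]  # hypothetical private feature vector
    grad_w, grad_b = gradients([0.1, 0.2, 0.3], 0.0, private_x, 1.0)
    # Matches private_x up to floating-point rounding.
    print(reconstruct_input(grad_w, grad_b))
```

Deep models and batched updates make reconstruction harder than in this toy case, but the same principle is why sharing raw updates for verification is treated as a privacy risk.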

Analysis

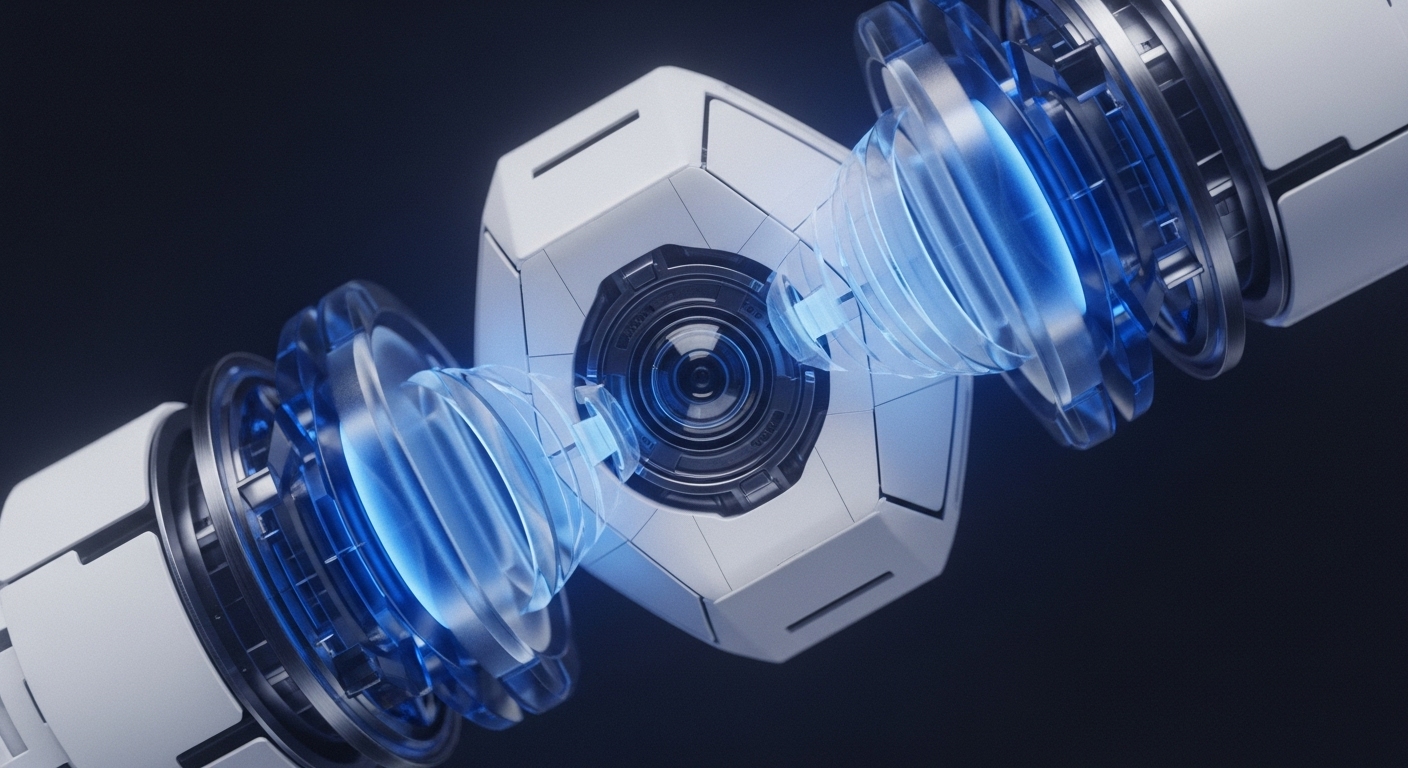

The ZKPoT mechanism reframes the consensus task: instead of a computational puzzle or a staking contest, it becomes a verifiable computation problem. A client trains a local model and then generates a zk-SNARK proof that attests to a specific, verifiable metric, such as the model’s accuracy against a public test set. This proof, which is succinct and non-interactive, is submitted to the blockchain for verification. The approach differs from previous ones because it verifies the integrity of the computation and the quality of the result rather than computational effort or economic stake, thereby eliminating the need to expose the private model weights for on-chain scrutiny.
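The message flow described above can be sketched as follows. This is not a zk-SNARK and offers no zero-knowledge or soundness guarantees. An HMAC under a shared demo key stands in for the proof system, issuing a tag only when the claimed accuracy actually holds, so the example shows only which data stays private and which becomes public. All names (`Statement`, `prove`, `verify`, `_SETUP_KEY`) and the toy linear classifier are assumptions made for illustration.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# Stand-in for proof-system setup. NOT real zero-knowledge cryptography.
_SETUP_KEY = b"demo-setup-key"


@dataclass(frozen=True)
class Statement:
    """Public inputs that would be posted on-chain."""
    model_commitment: str    # hash of the private weights
    test_set_digest: str     # hash of the public test set
    claimed_accuracy: float


def _sha256(obj):
    return hashlib.sha256(json.dumps(obj).encode()).hexdigest()


def accuracy(weights, test_set):
    """Accuracy of a toy linear classifier on (features, label) pairs."""
    def predict(x):
        return 1 if sum(w * xi for w, xi in zip(weights, x)) >= 0 else 0
    return sum(predict(x) == y for x, y in test_set) / len(test_set)


def _tag(st):
    payload = json.dumps(
        [st.model_commitment, st.test_set_digest, st.claimed_accuracy]
    ).encode()
    return hmac.new(_SETUP_KEY, payload, hashlib.sha256).hexdigest()


def prove(weights, test_set, claimed_accuracy):
    """Client side: uses the private weights, outputs only public data."""
    if abs(accuracy(weights, test_set) - claimed_accuracy) > 1e-9:
        raise ValueError("claimed accuracy does not match the model")
    st = Statement(_sha256(weights), _sha256(test_set), claimed_accuracy)
    return st, _tag(st)


def verify(st, proof, test_set):
    """Chain side: checks the claim without ever seeing the weights."""
    if st.test_set_digest != _sha256(test_set):
        return False
    return hmac.compare_digest(proof, _tag(st))


if __name__ == "__main__":
    public_test_set = [([1.0, 0.0], 1), ([-1.0, 0.5], 0),
                       ([0.5, 0.5], 1), ([-0.2, -0.1], 0)]
    private_weights = [1.0, 0.2]  # never leaves the client
    statement, proof = prove(private_weights, public_test_set, 1.0)
    print(verify(statement, proof, public_test_set))
```

In a real deployment the HMAC tag would be replaced by a proof generated from an arithmetic circuit encoding the accuracy computation, and the verifier would hold only a public verification key rather than a shared secret.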

Parameters

- Model Accuracy Preservation → Achieved without the accuracy degradation typically associated with differential privacy methods.

- Byzantine Resilience → The framework maintains stable performance even with a significant fraction of malicious clients.

- Privacy Defense → The use of ZK proofs virtually eliminates the risk of clients reconstructing sensitive data from model parameters.

Outlook

The ZKPoT theory opens new avenues for the convergence of decentralized AI and cryptoeconomic systems. Future research will focus on optimizing the zk-SNARK circuit design for complex, high-dimensional machine learning models and integrating ZKPoT into decentralized autonomous organizations (DAOs) to govern shared AI infrastructure. Within 3 to 5 years, this work could unlock a new class of private, verifiable, and globally scaled AI services, enabling trustless data marketplaces and collaborative research platforms in highly regulated industries.

Verdict

The Zero-Knowledge Proof of Training consensus is a foundational primitive that resolves the long-standing privacy-efficiency trade-off for decentralized machine learning systems.