Briefing

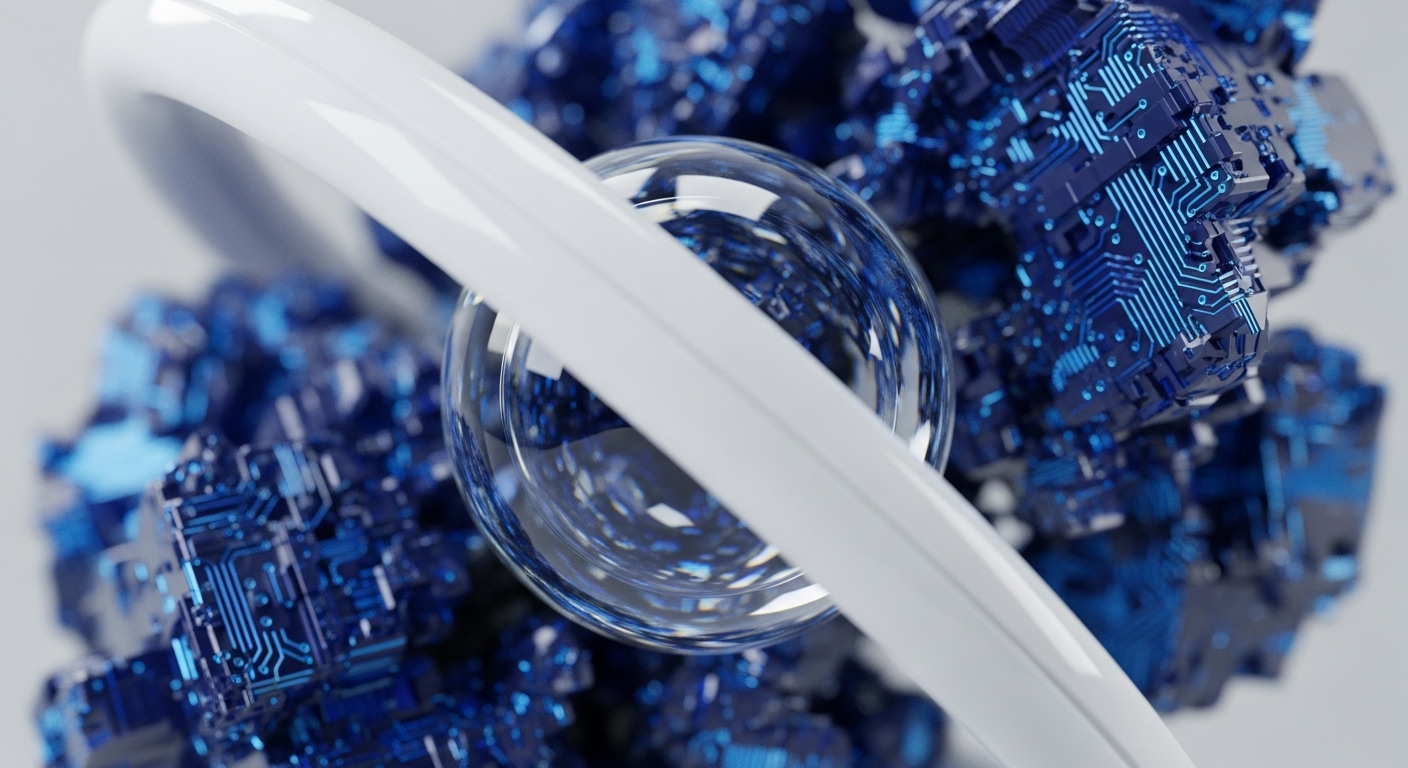

The fundamental problem in blockchain-secured Federated Learning (FL) is that existing designs cannot ensure consensus efficiency and participant data privacy at the same time. This research introduces the Zero-Knowledge Proof of Training (ZKPoT) consensus mechanism, a new primitive that uses zk-SNARKs to cryptographically verify the correctness and performance of a participant's model update without disclosing the underlying training data or model parameters. By maintaining model integrity and data privacy concurrently, this innovation establishes a new security baseline for decentralized artificial intelligence and opens the way to trustless, globally collaborative machine learning networks.

Context

Before this work, blockchain-secured Federated Learning systems had to rely on traditional consensus protocols: Proof-of-Work, which is computationally prohibitive, or Proof-of-Stake, which risks centralization by favoring large stakers. Learning-based consensus mechanisms, adopted to save energy, introduced a critical vulnerability: the model gradients and updates shared during consensus can leak sensitive, proprietary training data. The result was a seemingly unavoidable trade-off between network efficiency and data confidentiality.

Analysis

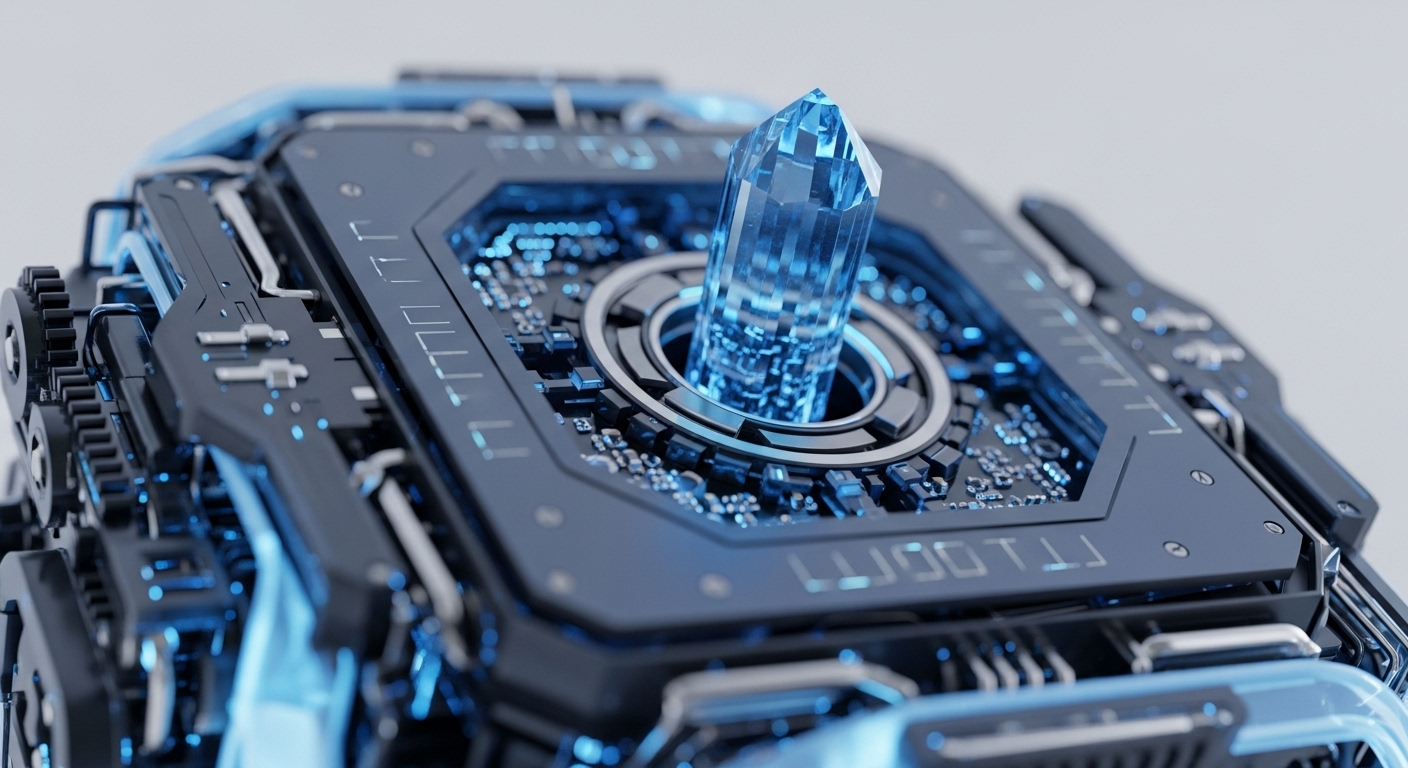

The ZKPoT mechanism expresses the model training process as a mathematical statement that can be proven with a zk-SNARK. Instead of submitting the model update itself, a participant generates a succinct, non-interactive cryptographic proof attesting to two facts: that the model was trained correctly according to the protocol rules, and that the resulting model achieves a verifiable performance metric. This differs fundamentally from previous approaches because the network's consensus process verifies a cryptographic proof of contribution rather than the contribution data itself, decoupling the validation of work from the disclosure of sensitive information.
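As a rough illustration of where the proof sits in a consensus round, the Python sketch that follows models a round in which nodes see only a model commitment, a claimed accuracy, and an opaque proof, never the weights or data. Everything here is hypothetical: `Submission`, `select_leader`, the `min_accuracy` threshold, and the rule that the highest verified accuracy wins the round are assumptions for illustration, and the zk-SNARK verifier is abstracted as a callable rather than implemented.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Submission:
    """What a participant broadcasts in this sketch: no weights, no training data."""
    participant: str
    model_commitment: bytes  # hypothetical commitment to the trained weights
    claimed_accuracy: float  # public performance metric the proof attests to
    proof: bytes             # opaque zk-SNARK proof bytes


# Hypothetical verifier interface: (commitment, claimed accuracy, proof) -> valid?
Verifier = Callable[[bytes, float, bytes], bool]


def select_leader(subs: Sequence[Submission], verify: Verifier,
                  min_accuracy: float) -> Optional[Submission]:
    """Keep submissions that meet the threshold AND whose proof verifies,
    then pick the highest verified accuracy (an assumed leader rule)."""
    accepted = [
        s for s in subs
        if s.claimed_accuracy >= min_accuracy
        and verify(s.model_commitment, s.claimed_accuracy, s.proof)
    ]
    if not accepted:
        return None
    # Deterministic tie-break on participant id, so every node that sees the
    # same accepted set selects the same leader.
    return max(accepted, key=lambda s: (s.claimed_accuracy, s.participant))


def stub_verify(commitment: bytes, accuracy: float, proof: bytes) -> bool:
    # Stand-in only: a real node would run the SNARK verifier on the public
    # inputs (commitment, accuracy) and the proof here.
    return proof == b"valid"


subs = [
    Submission("alice", b"c1", 0.91, b"valid"),
    Submission("bob", b"c2", 0.95, b"forged"),   # rejected: proof fails
    Submission("carol", b"c3", 0.89, b"valid"),
]
leader = select_leader(subs, stub_verify, min_accuracy=0.85)
print(leader.participant)  # alice
```

Because the verifier is injected, the round logic does not depend on a particular SNARK backend, and a submission with an invalid proof is filtered out no matter how high its claimed accuracy is.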

Parameters

- Byzantine Attack Robustness → The system resists both privacy attacks and Byzantine attacks, preserving security among mutually untrusted parties.

- Accuracy Maintenance → Preserves model accuracy and utility, avoiding the accuracy-for-privacy trade-off that other privacy-preserving schemes incur.

- Communication Efficiency → Significantly reduces communication and storage costs compared to traditional consensus protocols and Federated Learning methods.
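To see why a succinct proof can cut communication costs, the arithmetic that follows compares the size of a raw model update with a constant-size proof. The briefing does not name the proof system, so this sketch assumes Groth16 over the BN254 curve, whose proof consists of two G1 points and one G2 point (128 bytes in compressed form), and float32 model parameters. The model sizes are arbitrary examples, not results from the research.

```python
# Assumption: Groth16 over BN254, proof = 2 G1 points + 1 G2 point, compressed.
G1_COMPRESSED_BYTES = 32
G2_COMPRESSED_BYTES = 64
PROOF_BYTES = 2 * G1_COMPRESSED_BYTES + G2_COMPRESSED_BYTES  # 128


def update_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Size of a raw model update, assuming float32 (4-byte) parameters."""
    return num_params * bytes_per_param


# The proof size stays fixed while the update grows linearly with the model.
for n in (10_000, 1_000_000, 100_000_000):
    ratio = update_bytes(n) / PROOF_BYTES
    print(f"{n:>11,} params: update {update_bytes(n):>13,} B "
          f"vs proof {PROOF_BYTES} B (~{ratio:,.0f}x)")
```

The point is the scaling rather than the exact figures: the public inputs (such as a commitment and a claimed metric) add only a small, fixed amount on top of the proof, whereas a raw update grows with every parameter.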

Outlook

The introduction of ZKPoT opens a new research avenue for cryptographically enforced, incentive-compatible mechanisms within decentralized AI. Over the next three to five years, this principle could enable commercial-grade, multi-party data collaboration platforms where competing entities train on combined private datasets without exposing proprietary information. Future research will focus on reducing proving time for increasingly large machine learning models and on formally integrating these proofs into general-purpose smart contract execution environments.

Verdict

The Zero-Knowledge Proof of Training is a foundational cryptographic primitive that resolves the privacy-utility dilemma for decentralized machine learning, securing a new class of global AI systems.