Briefing

The foundational problem in integrating machine learning with blockchain systems is the trade-off between verifiable contribution and data privacy: traditional consensus methods either compromise sensitive training data or introduce excessive computational overhead. This research introduces the Zero-Knowledge Proof of Training (ZKPoT), a novel consensus mechanism that uses zero-knowledge succinct non-interactive arguments of knowledge (zk-SNARKs) to let participants cryptographically prove the integrity and accuracy of their model updates without revealing the underlying model parameters or private training datasets. This breakthrough establishes a new primitive for decentralized AI and a path toward scalable, trustless, and privacy-preserving machine learning whose training record is anchored in an immutable ledger.

Context

Prior to this work, blockchain-secured Federated Learning (FL) systems relied on conventional consensus mechanisms such as Proof-of-Work (PoW), which is energy-intensive, or Proof-of-Stake (PoS), which risks centralization as stake concentrates. Learning-based consensus, an alternative that replaces PoW's cryptographic puzzles with model training, introduced privacy vulnerabilities of its own: shared gradients and model updates can expose sensitive information through attacks such as model inversion or membership inference. The prevailing limitation was the inability to achieve both verifiable computational integrity and full privacy of the training data in a decentralized, trust-minimized setting.

Analysis

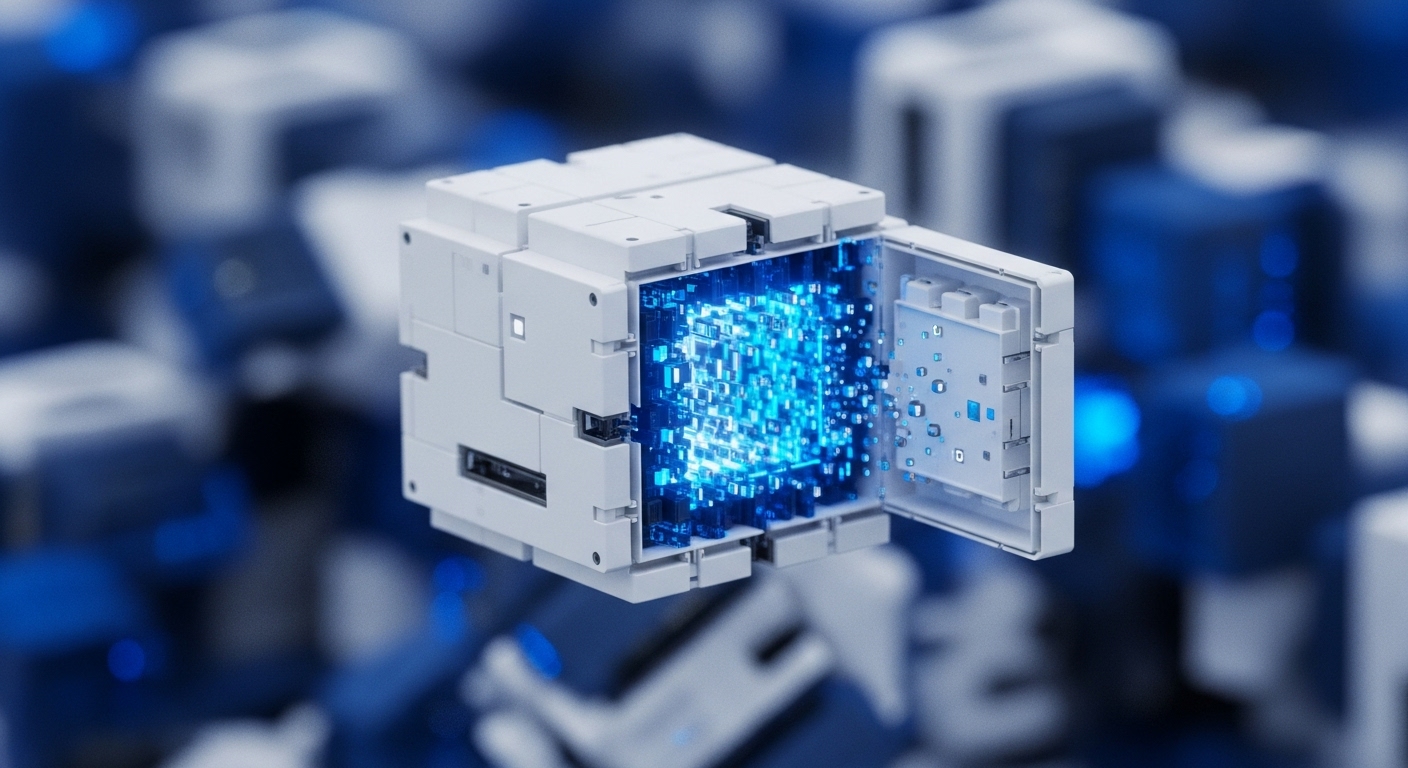

The core idea of ZKPoT is to decouple proof of useful training work from disclosure of the data behind it. The mechanism is anchored in the zk-SNARK protocol, which enables a client (the prover) to generate a succinct proof that a specific computation, namely model training and accuracy evaluation against a public test set, was performed correctly. The crucial innovation is an affine mapping scheme that quantizes floating-point model parameters into integers. This step is necessary because zk-SNARK circuits express computation as arithmetic over a large prime field, which has no native floating-point representation.
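The quantization step can be illustrated with a standard asymmetric affine scheme, x ≈ S·(q - Z), where S is a scale and Z a zero point. This is a minimal Python sketch under stated assumptions: 8-bit codes, Python's round-half-even rounding, and the BN254 scalar-field order (common in Groth16/PLONK toolchains) as the modulus p. The source does not say which bit width, rounding rule, or field ZKPoT uses, and every function name here is hypothetical. The point it shows is that once weights and inputs are integer codes, a dot product (the core of a layer's forward pass during accuracy evaluation) can be computed entirely modulo p and lifted back to the correct signed integer.

```python
# Minimal sketch of affine (scale + zero-point) quantization into a prime field.
# Assumptions, not from the source: 8-bit codes, round-half-even rounding,
# and the BN254 scalar-field order as the circuit modulus.
FIELD_MODULUS = 21888242871839275222246405745257275088548364400416034343698204186575808495617


def affine_params(values, bits=8):
    """Return (scale, zero_point) so that x ~= scale * (q - zero_point)."""
    lo = min(min(values), 0.0)  # widen the range to include 0 so it maps exactly
    hi = max(max(values), 0.0)
    qmax = 2 ** bits - 1
    scale = (hi - lo) / qmax if hi > lo else 1.0
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, bits=8):
    """Map floats to integer codes clamped to [0, 2**bits - 1]."""
    qmax = 2 ** bits - 1
    return [min(max(round(x / scale) + zero_point, 0), qmax) for x in values]


def dequantize(codes, scale, zero_point):
    """Approximate inverse of quantize()."""
    return [scale * (q - zero_point) for q in codes]


def to_field(n):
    """Embed a possibly negative integer as an element of Z_p."""
    return n % FIELD_MODULUS


def from_field(e):
    """Lift a field element back to a signed integer (valid while |n| < p/2)."""
    return e - FIELD_MODULUS if e > FIELD_MODULUS // 2 else e


def field_dot(qw, zw, qx, zx):
    """Dot product of the zero-point-centred codes, using only mod-p arithmetic."""
    acc = 0
    for a, b in zip(qw, qx):
        acc = (acc + to_field(a - zw) * to_field(b - zx)) % FIELD_MODULUS
    return acc


weights = [0.42, -1.3, 0.07, 0.9]
inputs = [1.0, 0.5, -0.25, 2.0]
sw, zw = affine_params(weights)
sx, zx = affine_params(inputs)
qw, qx = quantize(weights, sw, zw), quantize(inputs, sx, zx)

int_dot = from_field(field_dot(qw, zw, qx, zx))  # exact integer result
approx = sw * sx * int_dot                        # rescale to the float domain
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"exact={exact:.4f} quantized={approx:.4f}")
```

Negative centred values (such as the code for -1.3) wrap around to large field elements, yet the field result lifts back to the same integer that ordinary arithmetic would give; only the rescaling by S introduces approximation error.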

This proof is stored on the blockchain and serves as the client's consensus vote. Validators check the proof, which is computationally inexpensive because zk-SNARK verification is succinct, and accept the model update based on the proven accuracy. Validators therefore never need to inspect the model parameters or the private training data.
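The validator side of this vote can be sketched as a filter over submitted claims. In this minimal Python sketch, the record layout (`TrainingClaim`), the basis-point accuracy encoding, the acceptance threshold, and the best-first ranking are all assumptions made for illustration; the source only states that validators verify the proof and accept updates based on proven accuracy. Actual SNARK verification is abstracted behind a hypothetical `verify` callable.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class TrainingClaim:
    """Hypothetical on-chain record a client submits as its consensus vote."""
    client_id: str
    accuracy_bps: int   # proven test-set accuracy in basis points (0..10000)
    update_digest: str  # content address of the off-chain model update
    proof: bytes        # opaque zk-SNARK proof over the public inputs above


# Placeholder signature: a real deployment would wrap a SNARK verifier
# (e.g. Groth16) bound to the circuit's verifying key.
Verifier = Callable[[TrainingClaim], bool]


def accuracy_bps(correct: int, total: int) -> int:
    """Integer-only accuracy, the kind of value a circuit can expose publicly."""
    return correct * 10_000 // total


def select_updates(claims: List[TrainingClaim], verify: Verifier,
                   min_accuracy_bps: int) -> List[TrainingClaim]:
    """Accept claims whose proof verifies and whose accuracy clears the bar,
    ranked best-first (ties broken by client_id for determinism)."""
    accepted = [
        c for c in claims
        # Cheap threshold check first, so weak claims never reach the verifier.
        if c.accuracy_bps >= min_accuracy_bps and verify(c)
    ]
    return sorted(accepted, key=lambda c: (-c.accuracy_bps, c.client_id))


def demo_verify(claim: TrainingClaim) -> bool:
    # Stand-in for SNARK verification: only proofs equal to b"ok" pass.
    return claim.proof == b"ok"


claims = [
    TrainingClaim("A", accuracy_bps(910, 1000), "d1", b"ok"),
    TrainingClaim("B", accuracy_bps(950, 1000), "d2", b"forged"),
    TrainingClaim("C", accuracy_bps(800, 1000), "d3", b"ok"),
    TrainingClaim("D", accuracy_bps(930, 1000), "d4", b"ok"),
]
winners = select_updates(claims, demo_verify, min_accuracy_bps=8_500)
print([c.client_id for c in winners])  # ['D', 'A']
```

Client B claims the highest accuracy but is rejected because its proof fails, which is the property that lets validators trust the reported accuracy without seeing the model. Keeping accuracy as an integer also fits the finite-field constraint discussed above.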

Parameters

- Core Cryptographic Primitive → zk-SNARK (Zero-Knowledge Succinct Non-Interactive Argument of Knowledge), which is used to generate the cryptographic proof of training integrity.

- Performance → ZKPoT consistently outperforms traditional consensus mechanisms in both stability and accuracy across the evaluated FL tasks and remains robust under Byzantine faults.

- Privacy Defense → The use of ZK proofs virtually eliminates the risk of adversaries reconstructing clients' sensitive training data from model parameters, significantly reducing the efficacy of membership inference and model inversion attacks.

- Storage and Communication → The system integrates IPFS to streamline the FL and consensus processes, significantly reducing the communication and storage costs associated with large model updates.
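The storage bullet rests on content addressing: the bulky model update lives off-chain, and the ledger records only a fixed-size digest that anyone can use to fetch and integrity-check it. This Python sketch is an illustration under assumptions, not the ZKPoT or IPFS API: a plain SHA-256 hex digest stands in for a real IPFS CID (which adds multihash and multibase encoding and chunks large files into a DAG), and `OffChainStore` is a hypothetical in-memory substitute for an IPFS node.

```python
import hashlib


def content_address(blob: bytes) -> str:
    """SHA-256 hex digest used as a stand-in for an IPFS CID."""
    return hashlib.sha256(blob).hexdigest()


class OffChainStore:
    """Hypothetical in-memory stand-in for an IPFS node: content-addressed put/get."""

    def __init__(self) -> None:
        self._blobs = {}

    def put(self, blob: bytes) -> str:
        address = content_address(blob)
        self._blobs[address] = blob
        return address

    def get(self, address: str) -> bytes:
        blob = self._blobs[address]
        # Integrity check: content must hash to the address it was fetched by.
        if content_address(blob) != address:
            raise ValueError("retrieved content does not match its address")
        return blob


# A quantized update with one million 8-bit parameters (synthetic data).
update = bytes(i % 256 for i in range(1_000_000))
store = OffChainStore()
address = store.put(update)

on_chain_bytes = len(bytes.fromhex(address))  # only the 32-byte digest goes on-chain
print(f"off-chain: {len(update):,} bytes, on-chain pointer: {on_chain_bytes} bytes")
# off-chain: 1,000,000 bytes, on-chain pointer: 32 bytes
```

Because the pointer's size does not grow with the model, per-update on-chain storage stays constant; the succinct proof that accompanies it is likewise small (a few hundred bytes for Groth16).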

Outlook

This research opens new avenues for decentralized autonomous organizations (DAOs) and protocols that rely on collective, verifiable machine learning. Over the next three to five years, ZKPoT could become a foundational component for private on-chain AI governance, decentralized data marketplaces where data contributors are compensated based on provable model impact, and new classes of private DeFi applications whose risk models are trained on confidential data. The immediate next steps for the academic community are reducing prover time for the complex, high-dimensional computations inherent in deep learning models and exploring the mechanism's application in asynchronous, large-scale distributed systems.