Briefing

This research introduces Zero-Knowledge Proof of Training (ZKPoT), a consensus mechanism for blockchain-secured federated learning that targets the tension between privacy, efficiency, and decentralization in such systems. It uses the zk-SNARK (zero-knowledge succinct non-interactive argument of knowledge) protocol so that participants can cryptographically prove the validity and performance of their local model contributions without disclosing their training data or model parameters. The result is collaboration that decentralized AI systems can verify rather than merely trust. Large machine learning models could then be trained jointly with strong privacy guarantees and better resistance to malicious participants, a step toward secure and scalable on-chain intelligence.

Context

Prior to this research, blockchain-secured federated learning (FL) systems faced a critical dilemma → the established consensus mechanisms, such as Proof-of-Work (PoW) and Proof-of-Stake (PoS), each carry significant limitations. PoW is secure but computationally and energetically expensive, which makes it ill-suited to efficient, large-scale FL deployments. PoS is far more energy-efficient but introduces centralization risk: participants with large stakes gain disproportionate influence, undermining the decentralized ethos of blockchain.

Furthermore, nascent learning-based consensus approaches reduce cryptographic overhead by folding model training into consensus, but in doing so they introduced privacy vulnerabilities of their own. The gradients and model updates shared during consensus can leak sensitive information about the underlying training data. This left a clear theoretical and practical challenge → how to achieve consensus for collaborative AI that is private, efficient, and decentralized at the same time.
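To make the gradient-leakage risk concrete, here is a minimal sketch under a deliberately simple, hypothetical setting that is not taken from the paper. It uses a single fully connected layer with bias, trained on one private sample under squared-error loss. For such a layer the weight gradient is the outer product of the output error and the input, and the bias gradient is the output error itself. Dividing one by the other therefore recovers the private input exactly. The function names (`forward`, `shared_gradients`, `reconstruct_input`) and all numbers are illustrative only.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def forward(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Dense layer y = W x + b."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]


def shared_gradients(W: Matrix, b: Vector, x: Vector, target: Vector):
    """Gradients a participant would share for loss L = 0.5 * ||y - target||^2 on one sample."""
    y = forward(W, b, x)
    delta = [y_i - t_i for y_i, t_i in zip(y, target)]  # dL/dy
    grad_W = [[d * x_j for x_j in x] for d in delta]    # dL/dW = delta x^T
    grad_b = list(delta)                                 # dL/db = delta
    return grad_W, grad_b


def reconstruct_input(grad_W: Matrix, grad_b: Vector) -> Vector:
    """Row i of grad_W equals grad_b[i] * x, so any nonzero grad_b[i] reveals x."""
    for row, g in zip(grad_W, grad_b):
        if g != 0:
            return [v / g for v in row]
    raise ValueError("all bias gradients are zero; this attack does not apply")


# Usage: an observer sees only the shared gradients, never private_x.
W = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]
b = [0.1, -0.2]
private_x = [3.0, 1.0, 2.0]
target = [0.0, 1.0]

grad_W, grad_b = shared_gradients(W, b, private_x, target)
recovered = reconstruct_input(grad_W, grad_b)
print(recovered)  # matches private_x up to floating-point rounding
```

Real models and batched training make reconstruction harder than this single-sample case, but the example shows why sharing raw gradients is not, by itself, privacy-preserving.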

Analysis

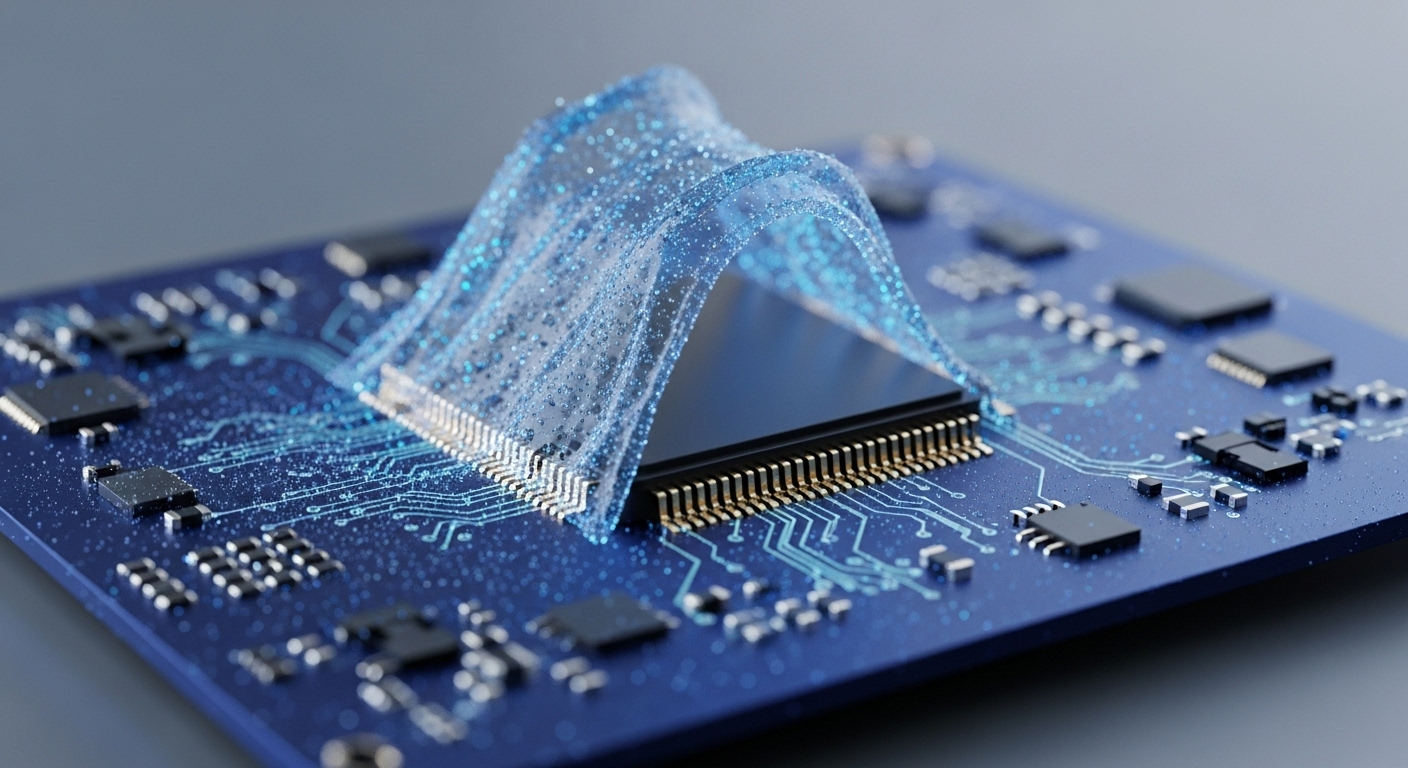

The core mechanism of ZKPoT is its application of the zk-SNARK protocol to the federated learning consensus process. Resource-intensive computation and stake-based validation play no part. Instead, each participant generates a cryptographic proof that its locally trained model achieves the performance it claims on a public test dataset. Because a zk-SNARK is succinct and non-interactive, any network node can check this proof cheaply and without further interaction with the prover. No access to the model parameters or the private training data is needed.
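The paragraph does not specify ZKPoT's circuit, so the following is only a sketch under stated assumptions. It shows the kind of statement a participant's zk-SNARK would attest to, written as an ordinary (not zero-knowledge) check:

- The public inputs are a commitment to the model, the public test set, and a claimed minimum number of correct predictions.
- The private witness is the model weights plus a commitment salt.
- A zk-SNARK for this relation would convince verifiers that the prover knows a witness making the check pass, without revealing it.

The salted SHA-256 commitment, the integer linear classifier, and the names `commit`, `predict`, and `relation_holds` are all hypothetical. Real circuits often use SNARK-friendly hashes such as Poseidon instead of SHA-256.

```python
import hashlib
from typing import List, Tuple

Weights = List[int]              # fixed-point integer weights; last entry is the bias (assumption)
Example = Tuple[List[int], int]  # (integer features, binary label)


def commit(weights: Weights, salt: bytes) -> str:
    """Hypothetical salted SHA-256 commitment to the weights."""
    encoded = b"".join(w.to_bytes(8, "big", signed=True) for w in weights)
    return hashlib.sha256(salt + encoded).hexdigest()


def predict(weights: Weights, features: List[int]) -> int:
    """Integer linear classifier: label 1 if w.x + bias >= 0, else 0."""
    *w, bias = weights
    score = sum(wi * xi for wi, xi in zip(w, features)) + bias
    return 1 if score >= 0 else 0


def relation_holds(commitment: str, test_set: List[Example], min_correct: int,
                   weights: Weights, salt: bytes) -> bool:
    """Public: commitment, test_set, min_correct. Private witness: weights, salt.

    In a ZKPoT-style system this logic would run inside a proving circuit; verifiers
    would check only the resulting proof against the public inputs.
    """
    if commit(weights, salt) != commitment:
        return False  # witness does not open the published commitment
    correct = sum(1 for x, label in test_set if predict(weights, x) == label)
    return correct >= min_correct


# Usage: the prover's private model and the public test set.
weights = [2, -1, -3]
salt = b"example-salt"
test_set = [([3, 1], 1), ([0, 2], 0), ([1, 0], 0), ([4, 4], 1)]
public_commitment = commit(weights, salt)

print(relation_holds(public_commitment, test_set, 4, weights, salt))      # True: 4 of 4 correct
print(relation_holds(public_commitment, test_set, 4, [2, -1, 0], salt))  # False: commitment mismatch
```

Counting correct predictions instead of computing a fractional accuracy keeps the check in integer arithmetic, which maps more naturally onto the finite-field arithmetic used by SNARK circuits.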

ZKPoT differs from previous approaches by decoupling verifiable contribution from data disclosure. Participants can prove honest engagement and model quality while keeping their proprietary information confidential. The system also defines a ZKPoT-customized block and transaction structure and uses IPFS to store proofs and model updates, reducing communication and storage overhead.
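The paper's actual block and transaction fields are not given here, so this is only a hypothetical sketch of the general pattern the paragraph describes. Bulky artifacts such as proofs and model updates go into a content-addressed store, and the transaction records just their addresses.

- `ContentStore` is an in-memory stand-in for IPFS. It keys data by plain SHA-256 hex digests, not real IPFS CIDs.
- Every field name in `ZKPoTTransaction` is an assumption.
- `submit_contribution` is an illustrative helper.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict


class ContentStore:
    """In-memory stand-in for IPFS, keyed by SHA-256 hex digest (NOT a real IPFS CID)."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self._blobs[address] = data
        return address

    def get(self, address: str) -> bytes:
        data = self._blobs[address]
        # Content addressing: the reader re-hashes to detect a tampered or corrupted blob.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


@dataclass(frozen=True)
class ZKPoTTransaction:
    """Hypothetical on-chain record: small fixed-size fields plus references to off-chain blobs."""
    participant: str
    round_number: int
    model_commitment: str
    claimed_correct: int
    proof_ref: str   # address of the zk-SNARK proof in the content store
    update_ref: str  # address of the (possibly large) model update


def submit_contribution(store: ContentStore, participant: str, round_number: int,
                        model_commitment: str, claimed_correct: int,
                        proof: bytes, update: bytes) -> ZKPoTTransaction:
    """Put the bulky payloads in the content store; keep only their addresses on-chain."""
    return ZKPoTTransaction(
        participant=participant,
        round_number=round_number,
        model_commitment=model_commitment,
        claimed_correct=claimed_correct,
        proof_ref=store.put(proof),
        update_ref=store.put(update),
    )


# Usage
store = ContentStore()
update = bytes(1_000_000)  # a 1 MB model update stays off-chain
tx = submit_contribution(store, "node-A", 1, "c0ffee", 4, b"proof-bytes", update)
print(len(tx.update_ref))  # 64 hex characters on-chain instead of 1,000,000 bytes
```

Because each address is derived from the content itself, any node that fetches a proof or update can confirm it is exactly what the transaction referenced.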

Parameters

- Core Concept → Zero-Knowledge Proof of Training (ZKPoT)

- Key Technology → zk-SNARK Protocol

- Problem Addressed → Privacy, efficiency, and decentralization in federated learning consensus

- Authors → Tianxing Fu, Jia Hu, Geyong Min, Zi Wang

- Integration Component → IPFS for data storage

Outlook

This research opens significant avenues for decentralized artificial intelligence and blockchain architecture. ZKPoT could enable privacy-preserving collaborative machine learning in industries where data confidentiality is paramount, such as healthcare and finance. In the next 3-5 years, the framework could inform robust, scalable blockchain networks that host complex AI training without compromising user data or system integrity. It also leaves open research questions in three areas:

- making zk-SNARK generation efficient for complex machine learning models
- designing incentive mechanisms for ZKPoT participants
- extending this verifiable-computation paradigm to other distributed AI tasks